The Rise of Small Language Models

Understanding LLMs and SLMs

What are Large Language Models (LLMs)?

Large Language Models (LLMs) are neural networks with billions of parameters, trained on vast datasets so that they can perform complex language tasks. These models excel in a variety of areas:

- Text Generation: LLMs create coherent and contextually relevant content, from blog posts to creative stories.

- Translation: They translate text from one language to another with remarkable accuracy.

- Summarization: They can condense lengthy texts into digestible summaries, making them useful for research and reporting.

The sheer size and diversity of the data that LLMs are trained on make them incredibly versatile and capable of handling a wide range of topics and styles.

What are Small Language Models (SLMs)?

Small Language Models (SLMs) are designed with far fewer parameters, typically ranging from millions to a few billion, making them compact and efficient. While they don’t match the scale or power of LLMs, they come with a unique set of advantages:

- Efficiency: SLMs require less computational power, making them easier to deploy in real-time applications.

- Faster Response Times: Due to their smaller size, SLMs deliver faster results, which is crucial for time-sensitive applications.

- Cost-Effectiveness: With lower operational costs, SLMs democratize access to language modelling, allowing startups and smaller businesses to leverage AI without hefty investments.
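
To make the efficiency point concrete, here is a minimal back-of-the-envelope sketch in Python. It estimates only the memory needed to hold a model's weights (parameter count times bytes per parameter) and ignores activations, caches, and runtime overhead, so real usage will be higher. The two model sizes are illustrative assumptions, not figures from any specific model.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: int, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to illustrate the gap.
    models = [
        ("100M-parameter SLM", 100_000_000),
        ("7B-parameter LLM", 7_000_000_000),
    ]
    for name, params in models:
        for precision in ("fp16", "int8"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: {gb:.2f} GB")
```

Under these assumptions, the smaller model's weights fit comfortably in the memory of a phone or embedded board, while the larger model needs a dedicated accelerator or aggressive quantization.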

The Comparative Landscape: LLMs vs. SLMs

Performance: While LLMs excel at understanding complex language patterns and generating nuanced responses, SLMs can still perform well on many specific tasks, particularly when fine-tuned on targeted datasets. For instance, an SLM fine-tuned for customer support can provide accurate answers in that domain, even if it lacks the general prowess of an LLM.

Accessibility: The resource demands of LLMs often restrict their usage to larger corporations with substantial computational resources. SLMs democratize access to language technologies, allowing startups, researchers, and individual developers to harness AI for innovative solutions without a hefty investment in infrastructure.

Feasibility: LLMs are well-suited for applications that require deep understanding, such as creative writing, in-depth research, and complex dialogues. SLMs, conversely, shine in applications where speed and efficiency are paramount.
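
One way to act on this split is to route each request to the model that suits it. The Python sketch below is only an illustration: `small_model` and `large_model` are hypothetical stand-ins for real model calls, and the routing rule (prompt length plus a few keywords) is an assumed heuristic, not an established method.

```python
from typing import Callable


# Hypothetical stand-ins for real model calls; swap in actual clients.
def small_model(prompt: str) -> str:
    return f"[SLM] {prompt}"


def large_model(prompt: str) -> str:
    return f"[LLM] {prompt}"


# Assumed heuristic: words that hint at open-ended, in-depth work.
COMPLEX_HINTS = {"analyze", "compare", "draft", "explain", "research", "write"}


def needs_large_model(prompt: str, max_words: int = 30) -> bool:
    """Return True when the prompt is long or contains a 'complex' keyword."""
    words = prompt.lower().split()
    cleaned = {word.strip(".,!?") for word in words}
    return len(words) > max_words or bool(COMPLEX_HINTS & cleaned)


def route(
    prompt: str,
    small: Callable[[str], str] = small_model,
    large: Callable[[str], str] = large_model,
) -> str:
    """Send quick, simple prompts to the SLM and everything else to the LLM."""
    model = large if needs_large_model(prompt) else small
    return model(prompt)


if __name__ == "__main__":
    print(route("What are your opening hours?"))
    print(route("Write a short story about a lighthouse keeper."))
```

In practice the routing decision could itself come from a small classifier, but even a simple rule like this keeps the fast, inexpensive path as the default.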

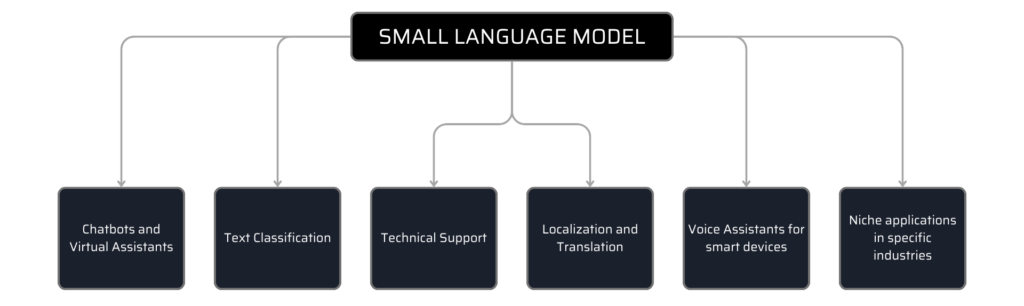

Applications of SLMs in Technology and Services

Common Use Cases for SLMs

- Chatbots and Virtual Assistants: SLMs can power customer service chatbots that provide quick responses to frequently asked questions, helping users with basic inquiries without the need for extensive computational resources.

- Text Classification: Small language models are particularly well-suited for tasks like sentiment analysis and categorization of text. Sentiment analysis involves determining whether a piece of text—such as a customer review, social media post, or feedback form—expresses a positive, negative, or neutral sentiment.

- Technical Support: SLMs can assist in providing troubleshooting steps or FAQs for software and hardware issues, enabling users to resolve common problems efficiently.

- Localization and Translation: For specific industries or regions, SLMs can offer localized translation services that require less computational power while maintaining relevance to local dialects and terminologies.

- Voice Assistants for Smart Devices: In IoT applications, SLMs can enable voice commands for smart home devices, facilitating fast, responsive interactions that require less computational power than larger models.

- Niche Applications in Specific Industries: SLMs can be fine-tuned for specific sectors like healthcare, legal, or finance, providing tailored insights and information relevant to those fields without the overhead of larger models.

Why SLMs Will Be Needed in the Future

- Demand for Efficiency: As AI spreads into real-time, customer-facing applications, the demand for fast, efficient models will only increase. SLMs fit this need by delivering quick responses while consuming fewer resources.

- Data Privacy and Security: With increasing data privacy concerns, many organizations prefer small, localized AI models that run on-premises rather than relying on cloud-based services. SLMs can be trained on specific, sensitive datasets and run locally, reducing the need for extensive cloud resources and lowering the risk of data breaches.

- Tailored Solutions: Industries like healthcare, legal, and finance are becoming more specialized, and the need for tailored language models is growing. SLMs allow fine-tuning on niche datasets, providing highly relevant outputs. This specialization can lead to improved performance in targeted tasks.

- Eco-Friendly AI: The environmental impact of large AI models cannot be ignored. Training and operating LLMs require substantial energy, contributing to carbon footprints. SLMs, with their lower resource demands, present a more sustainable alternative for organizations aiming to reduce their environmental impact.

Advantages of Small Language Models

- Resource Efficiency: SLMs require significantly less memory and processing power, making them ideal for devices with limited capabilities such as smartphones and IoT devices.

- Speed: Faster response times make SLMs perfect for customer service and real-time data processing applications.

- Cost-Effectiveness: Lower computational and operational costs make SLMs more accessible for small businesses and startups.

- Customization: SLMs can be easily fine-tuned for specific tasks, allowing for enhanced performance in targeted applications.

Disadvantages of Small Language Models

- Limited Understanding: SLMs might lack the nuanced language understanding that LLMs offer, which can lead to less coherent or relevant responses.

- Task-Specific Performance: SLMs are excellent for narrow, focused tasks but may struggle to generalize across diverse contexts.

- Training Challenges: Fine-tuning SLMs requires high-quality niche datasets, which can be a challenge for organizations without access to such data.

- Scalability: SLMs may not handle large-scale deployments as effectively as LLMs, especially when there is a sudden surge in demand.

To summarise, the rise of SLMs is changing the AI landscape. While LLMs will continue to dominate deep language understanding, SLMs offer distinct advantages in efficiency, speed, cost, and eco-friendliness for real-time applications in various industries. As businesses prioritize tailored solutions, data privacy, and environmental sustainability, SLMs are poised to carve out their niche. The future of AI will likely see a blend of large and small models working together, with Small Language Models playing a pivotal role in democratizing access to advanced language technologies. In an era where innovation requires balancing performance with efficiency, Small Language Models offer a promising path forward for businesses of all sizes. As AI continues to evolve, these compact models will likely lead to a new wave of accessible and sustainable AI solutions.

At MosChip Technologies, we specialize in AI solutions that empower enterprises to harness the full potential of AI across cloud, edge, and generative AI, with deep expertise in LLMs and SLMs. Our end-to-end services, from R&D to development, deployment, and testing, help drive efficiency, improve decision-making, and unlock new opportunities, ensuring that AI solutions make an impact.