Versal ACAP architecture & intelligent solution design

Overview

Xilinx’s new heterogeneous compute platform, the Versal Adaptive Compute Acceleration Platform (ACAP), combines software and hardware programmability. Versal ACAP devices are used in a wide range of applications, including data center, 5G wireless, AI/ML, aerospace and defense (A&D) radar, automotive, and wired communications.

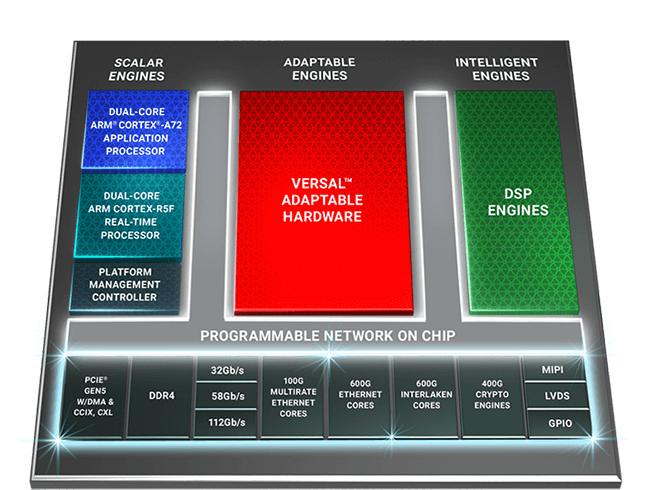

Hardware Architecture

Versal ACAP is powered by scalar, adaptable, and intelligent engines. The network on chip (NoC) gives all of these engines access to on-chip memory.

Source: Xilinx

Scalar Engines

Scalar engines handle platform computing, decision making, and control. For general-purpose computing, Versal uses a dual-core Arm Cortex-A72 Application Processing Unit (APU). The APU supports virtualization, allowing multiple software stacks to run simultaneously. A dual-core Arm Cortex-R5F Real-time Processing Unit (RPU) is available for real-time applications. The RPU can be configured either as two independent processors or as a single processor in lockstep mode. It suits a variety of time-critical applications, such as safety functions in the automotive domain.
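To make the lockstep idea concrete, here is a minimal software sketch, assuming a simplified model in which two redundant cores run the same computation and a comparator checks their outputs. The names `run_lockstep`, `LockstepMismatch`, `healthy`, and `faulty` are hypothetical and chosen for illustration; the actual RPU performs this comparison in hardware.

```python
from typing import Callable


class LockstepMismatch(RuntimeError):
    """Raised when the two redundant cores disagree."""


def run_lockstep(
    core_a: Callable[[int], int],
    core_b: Callable[[int], int],
    value: int,
) -> int:
    """Run the same input on both cores and compare the outputs."""
    out_a = core_a(value)
    out_b = core_b(value)
    if out_a != out_b:
        # In a safety system this would trigger a fault response.
        raise LockstepMismatch(f"core outputs differ: {out_a} != {out_b}")
    return out_a


def healthy(x: int) -> int:
    return 2 * x + 1


def faulty(x: int) -> int:
    # Simulate a single flipped bit in the result.
    return (2 * x + 1) ^ 0x4
```

Calling `run_lockstep(healthy, healthy, 5)` returns the agreed result, while pairing `healthy` with `faulty` raises `LockstepMismatch`. The trade-off is that lockstep gives up one core's worth of throughput in exchange for fault detection.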

Platform Management Controller

Platform Management Controller (PMC) is responsible for boot, configuration, partial re-configuration, and general platform management tasks, including power, clock, pin control, reset management, and system monitoring. It is also responsible for device life cycle management, including security.

Adaptable Engines

The adaptable engines feature classic FPGA technology: programmable silicon. They include DSP engines, configurable logic blocks (CLBs), and two types of RAM (block RAM and UltraRAM). With this configurable fabric, users can build custom accelerators for many kinds of applications.

Intelligent Engines

AI Engines are software programmable and hardware adaptable. They are an array of VLIW SIMD vector processors used for ML/AI inference and advanced signal processing. The AI Engine has a tile-based architecture. Each tile is made up of a vector processor, a scalar processor, dedicated program and data memory, dedicated AXI data movement channels, DMA, and locks.
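As a rough illustration of the SIMD style of computation, the sketch below computes a dot product in chunks, keeping one accumulator per lane and reducing the lanes at the end. It is plain Python, not AI Engine code; the function name `simd_dot` and the lane count of 8 are assumptions made for the example.

```python
from typing import Sequence


def simd_dot(a: Sequence[int], b: Sequence[int], lanes: int = 8) -> int:
    """Dot product computed `lanes` elements at a time.

    Each lane keeps its own accumulator, mimicking a vector
    multiply-accumulate; the lanes are summed once at the end.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    acc = [0] * lanes
    for start in range(0, len(a), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        # One "vector instruction": all lanes multiply-accumulate together.
        for lane, (x, y) in enumerate(zip(chunk_a, chunk_b)):
            acc[lane] += x * y
    # Final horizontal reduction across lanes.
    return sum(acc)
```

On a real vector processor the inner loop is a single instruction, so the work per element drops roughly in proportion to the lane count.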

Network on Chip

The network on chip (NoC) makes Versal ACAPs even more powerful by connecting all engines, the memory hierarchy, and high-speed I/Os. The NoC makes each hardware component and soft IP module accessible to the others and to software through a memory-mapped interface.
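A memory-mapped interface means that every component is reached through an address range in one shared address space. The sketch below models that idea with a lookup that routes an address to a target and an offset. The region names, base addresses, and sizes are hypothetical and do not reflect the real Versal address map.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Region:
    name: str
    base: int
    size: int

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


# Hypothetical address map for illustration only.
ADDRESS_MAP: List[Region] = [
    Region("ddr", 0x0000_0000, 0x8000_0000),
    Region("pl_accelerator", 0x8000_0000, 0x0001_0000),
    Region("ai_engine_cfg", 0x9000_0000, 0x0010_0000),
]


def route(addr: int) -> Tuple[str, int]:
    """Return the target region and the offset inside it for an address."""
    for region in ADDRESS_MAP:
        if region.contains(addr):
            return region.name, addr - region.base
    raise ValueError(f"address {addr:#x} is not mapped")
```

With this map, `route(0x8000_0010)` resolves to offset `0x10` inside the hypothetical `pl_accelerator` region, and an unmapped address raises `ValueError`.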

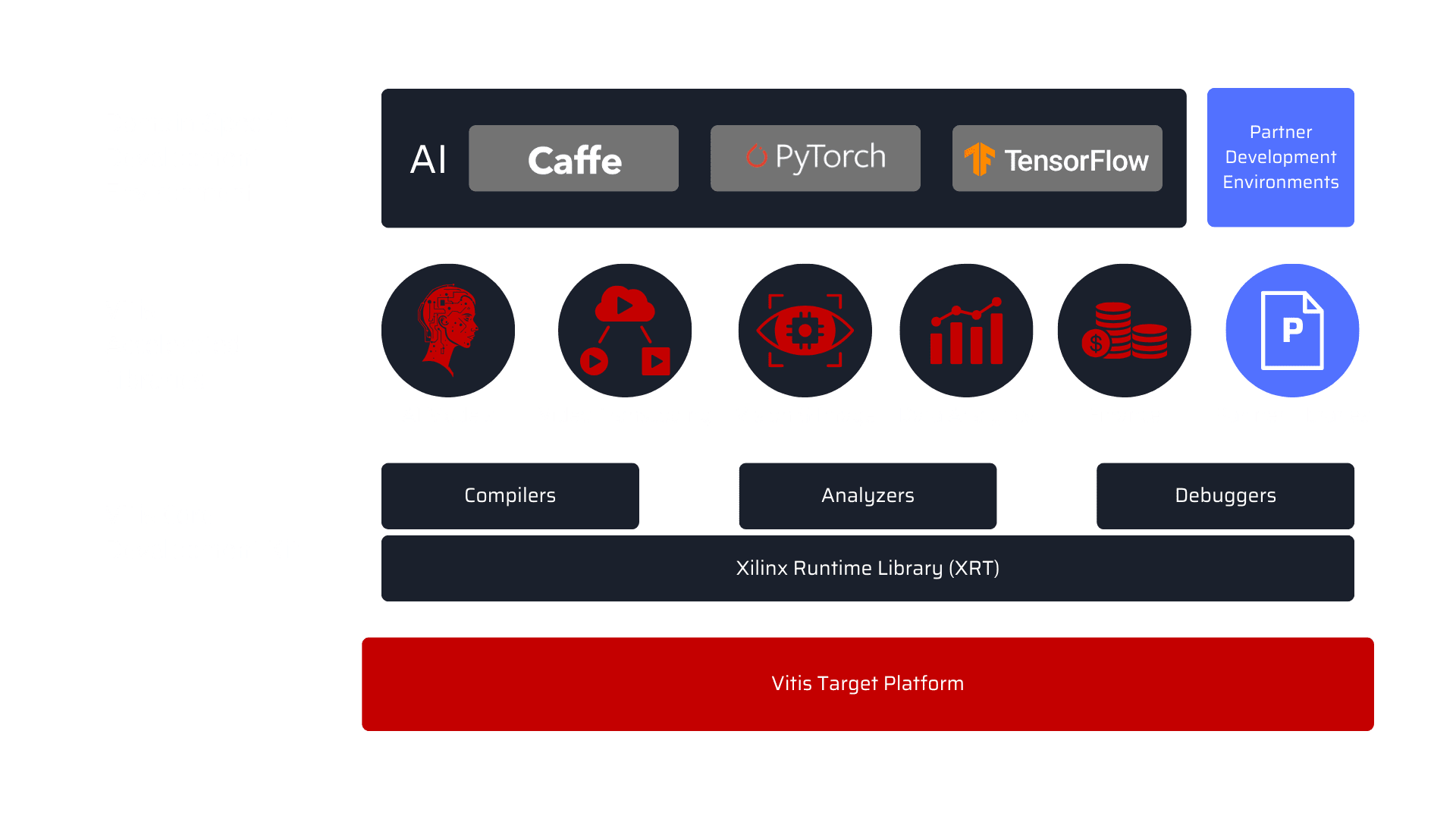

Software Support

Xilinx introduced Vitis, a unified software development platform that enables embedded software and accelerated applications on heterogeneous Xilinx platforms, including FPGAs, SoCs, and Versal ACAPs.

The Vitis unified software development platform provides a set of open-source libraries that let developers build hardware-accelerated applications without deep hardware expertise. It also provides the Xilinx Runtime library (XRT), which includes firmware, board utilities, a kernel driver, user-space libraries, and APIs. Vitis also provides an AI development environment that supports deep learning frameworks such as TensorFlow, PyTorch, and Caffe, and offers comprehensive APIs to prune, quantize, optimize, debug, and compile trained networks for high AI inference performance.
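To show what the quantize step does in principle, here is a minimal sketch of symmetric per-tensor int8 quantization. It is a generic illustration and not the Vitis AI API; the function names `quantize_int8` and `dequantize` are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to int8 using one symmetric scale for the whole tensor."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]
```

Each recovered value differs from the original by at most about half a quantization step (`scale / 2`), which is the accuracy cost paid for smaller weights and cheaper integer arithmetic.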

Source: Xilinx

MosChip has a wide range of expertise on various platforms, including vision and image processing on VLIW SIMD vector processors, FPGA design development, Linux kernel driver development, platform and power management, and multimedia development.

MosChip is developing high-performance vision and ML/AI solutions on Versal ACAP that use the high-bandwidth, configurable NoC and the AI Engine tile array in tandem with DMA and the interconnect to the programmable logic (PL). Versal’s high-bandwidth interfaces and high-compute processors can improve performance. One such use case that MosChip is already developing is a Scene Text Detection solution using Vitis AI and the Deep Learning Processor Unit (DPU).

The Scene Text Detection use case demands high compute power for LSTM operations. Our AI/ML engineering team evaluates each design to take advantage of the custom memory hierarchy, the multicast stream capability of the AI Engine interconnect, and AI-optimized vector instructions to get the best performance. Together with the AI Engine's DMA capability and ping-pong buffering of stream data in local tile memory, this parallelism opens up many optimized implementations. The DMA in each AI Engine tile moves data from incoming streams to local memory and from local memory to outgoing streams. A configuration interconnect, accessed through a memory-mapped AXI4 interface, is a shared, transaction-based switched interconnect that gives external masters access to the internal AI Engine tiles.
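The ping-pong buffering mentioned above can be sketched as a small simulation: while the kernel consumes one buffer, the DMA fills the other, and the two swap roles after each block. This is a sequential Python model under simplifying assumptions (no real concurrency or locks); `ping_pong_process` and its parameters are hypothetical names, not part of any Xilinx API.

```python
from typing import Callable, Iterable, List


def ping_pong_process(
    blocks: Iterable[List[int]],
    kernel: Callable[[List[int]], int],
) -> List[int]:
    """Process stream blocks using two alternating local buffers."""
    buffers: List[List[int]] = [[], []]  # "ping" and "pong"
    results: List[int] = []
    fill = 0         # index of the buffer the simulated DMA writes next
    pending = False  # True once a filled buffer is waiting for the kernel

    for block in blocks:
        # DMA step: copy the incoming block into the fill buffer.
        buffers[fill] = list(block)
        # Compute step: in hardware this overlaps with the DMA step;
        # here it runs on the buffer that was filled one step earlier.
        if pending:
            results.append(kernel(buffers[1 - fill]))
        pending = True
        fill = 1 - fill  # swap the roles of ping and pong

    # Drain: the most recently filled buffer has not been processed yet.
    if pending:
        results.append(kernel(buffers[1 - fill]))
    return results
```

For example, `ping_pong_process([[1, 2], [3, 4], [5, 6]], sum)` processes the blocks in arrival order. In hardware, the benefit is that data transfer time is hidden behind compute time, at the cost of twice the local buffer memory.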

Further, cascade streams across multiple AI Engine tiles allow greater design flexibility by accommodating multiple ML inference instances. Along with a deep understanding of Versal ACAP memory hierarchies, AI Engine tiles, DMA, and parallel processing, MosChip’s extensive experience with leading ML/AI frameworks (TensorFlow, PyTorch, and Caffe) helps in creating end-to-end accelerated ML/AI pipelines with a focus on pre- and post-processing of streams and model customization.

MosChip has also been an early major contributor to Versal ACAP platform management development. Key contributions in this space include software components for Versal such as the platform management library (xilpm), Arm Trusted Firmware, Linux device drivers, and U-Boot support for platform management.

Through our hands-on experience on Versal ACAP for AI/ML, Machine Vision & Platform Management, MosChip can help customers take their concepts to design & deployment in a seamless fashion.

About MosChip:

MosChip has 20+ years of experience in Semiconductor, Embedded Systems & Software Design, and Product Engineering services with the strength of 1300+ engineers.

Established in 1999, MosChip has development centers in Hyderabad, Bangalore, Pune, and Ahmedabad (India) and a branch office in Santa Clara, USA. Our embedded expertise covers platform enablement (FPGA/ASIC/SoC/processors), firmware and driver development, BSP and board bring-up, OS porting, middleware integration, product re-engineering and sustenance, device and embedded testing, test automation, IoT, AI/ML solution design, and more. Our semiconductor offerings cover silicon design, verification, validation, and turnkey ASIC services. We are also a TSMC DCA (Design Center Alliance) Partner.

Stay current with the latest MosChip updates via LinkedIn, Twitter, Facebook, Instagram, and YouTube.

Author

Ranganathan has about 23 years of experience in product engineering services, covering embedded systems design and development across defense, automotive, industrial, and consumer electronics. This experience spans a wide range of technologies, including embedded systems, multimedia, in-vehicle infotainment, system software development, and real-time operating systems. As a technical leader, Ranganathan is responsible for the vision and development of digital transformation solutions and leads a team in FPGA design, embedded software, platform management, multimedia, and AI technologies. The team creates industry-standard and custom solutions using IoT, artificial intelligence, machine vision, audio/video processing, graphics, data processing, and virtualization. Ranganathan has delivered highly integrated, mission-critical systems and applications to leading global design houses and OEMs.