How Computer Vision Propels Autonomous Vehicles from Concept to Reality

Autonomous vehicles are moving from concept to reality as Computer Vision technologies advance. Computer Vision supports perception, localization and mapping, path planning, and the controllers that actuate the vehicle. The primary task is perceiving the environment: cameras identify other vehicles, pedestrians, roads, and pathways, while sensors such as radar and LIDAR complement the data obtained by the cameras.

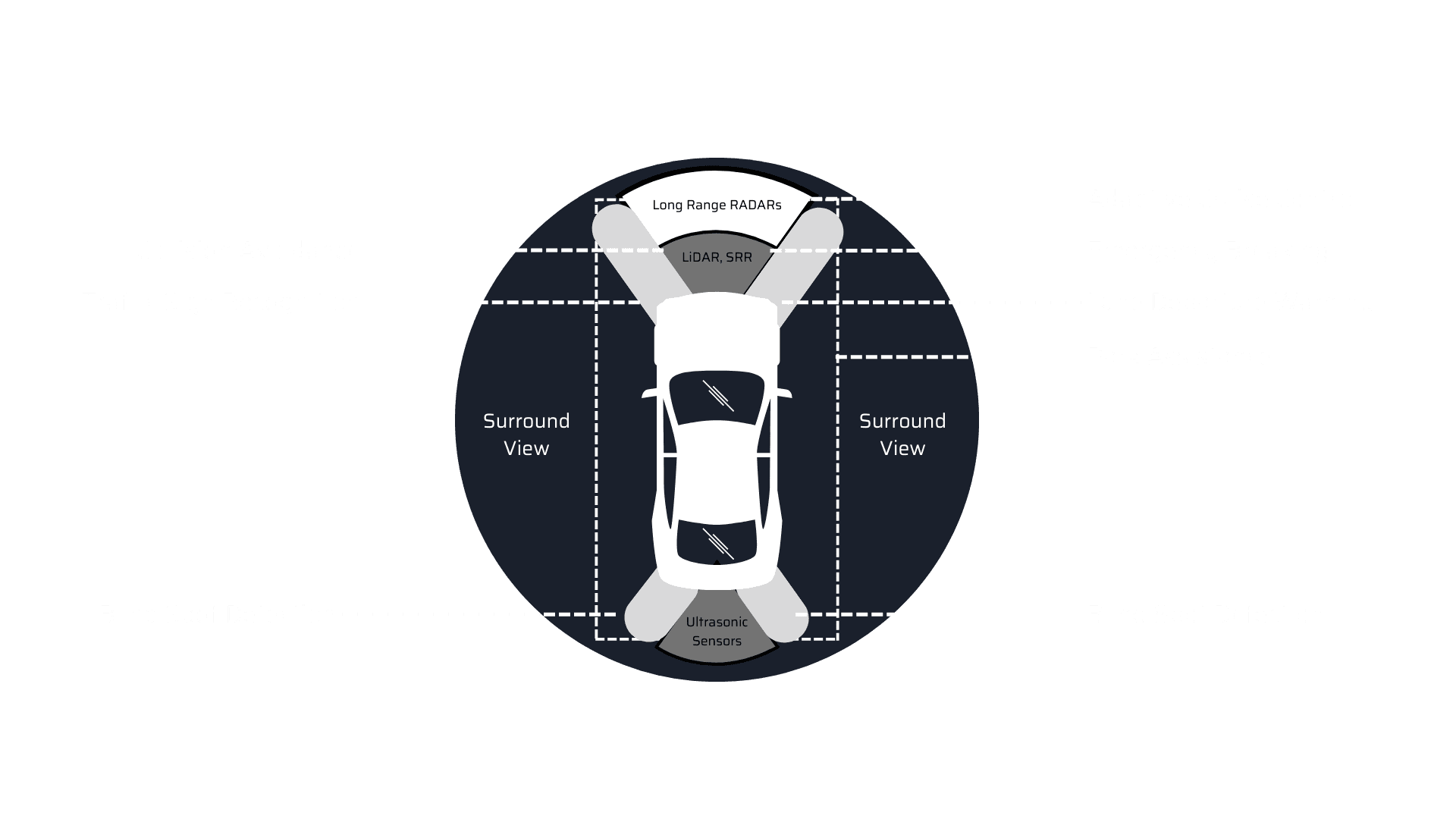

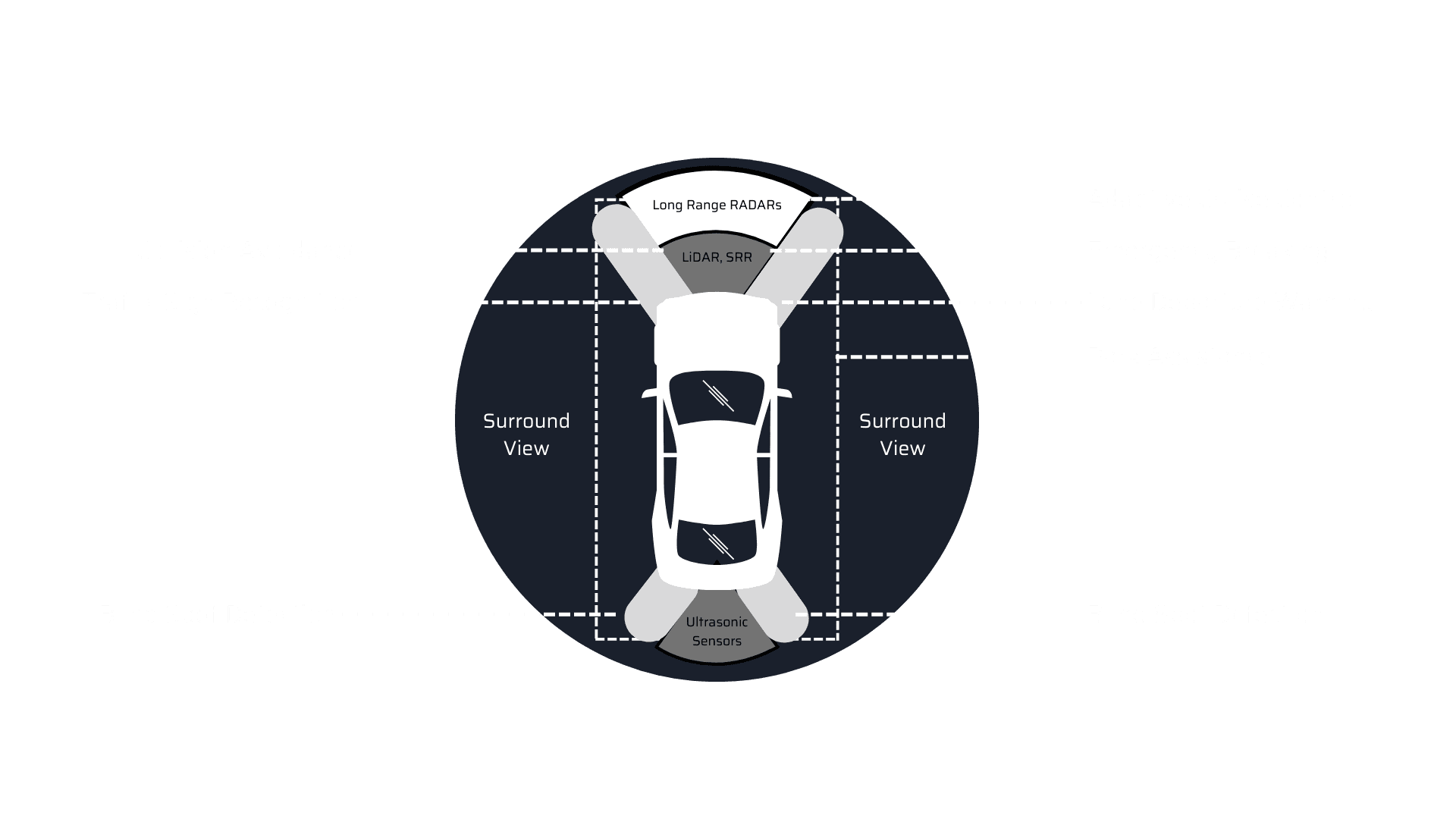

Histogram of Oriented Gradients (HOG) features combined with machine learning classifiers have received a lot of attention for object detection. HOG describes the shape of an object by summarizing the direction and strength of the intensity gradients in small regions of an image, and a classifier is trained on these features to recognize that shape.

A typical vision system consists of near- and far-range radars; front, side, and rear cameras; and ultrasonic sensors. This system assists in safety-enabled autopilot driving and retains data that can be reused later.
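To make the HOG idea above more concrete, here is a minimal sketch of an orientation histogram for a single image cell. It is a simplification: the function name `cell_hog`, the 9-bin layout, and the hard bin assignment are assumptions for illustration, and production HOG implementations also interpolate votes between bins and normalize across blocks of cells.

```python
import math


def cell_hog(cell, n_bins=9):
    """Histogram of unsigned gradient orientations (0-180 degrees) for one cell.

    `cell` is a 2D list of grayscale intensities. Border pixels are skipped
    so that central differences are always defined.
    """
    bin_width = 180.0 / n_bins
    hist = [0.0] * n_bins
    rows, cols = len(cell), len(cell[0])
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical gradient
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # Each pixel votes for its orientation bin, weighted by magnitude.
            hist[int(angle // bin_width) % n_bins] += magnitude
    return hist


# Hypothetical 6x6 cell containing a vertical edge (dark left, bright right).
edge = [[0, 0, 0, 255, 255, 255] for _ in range(6)]
hist = cell_hog(edge)
```

For this vertical edge, all of the gradient energy lands in the first bin (horizontal gradient direction), which is the kind of shape signature a classifier can learn from.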

The Computer Vision market size stands at $9.45 billion and is expected to reach $41.1 billion by 2030, according to a report by Allied Market Research. Global demand for autonomous vehicles is growing: by 2030, an estimated 12% to 17% of total vehicle sales are expected to come from the autonomous vehicle segment. OEMs across the globe are seizing this opportunity and investing heavily in ADAS, Computer Vision, and connected car systems.

Computer Vision with Sensor

How does Computer Vision enable Autonomous Vehicles?

Object Detection and Classification

Object detection identifies both stationary and moving objects on the road, such as vehicles, traffic lights, and pedestrians. To avoid collisions, a vehicle needs to identify the objects around it continuously while driving. Computer Vision uses sensors and cameras to capture the full surroundings and build 3D maps, which makes it easier to identify objects, avoid collisions, and keep passengers safe.

Information Gathering for Training Algorithms

Computer Vision technology uses cameras and sensors to gather large sets of data, including the type of location, traffic and road conditions, the number of people nearby, and more. This data supports quick decision-making and gives autonomous vehicles situational awareness. It can also be used to train deep learning models and improve their performance.

Low-Light Mode with Computer Vision

Driving in low light is considerably more complex than driving in daylight, because images captured in low light are often blurry and unclear, which makes driving unsafe. With Computer Vision, vehicles can detect low-light conditions and use LIDAR sensors, HDR sensors, and thermal cameras to produce high-quality images and video. This improves safety for night driving.
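One simple way to picture the low-light detection step described above is a brightness check on a grayscale frame that decides which sensors to rely on. The sketch below is illustrative only: the function names, the threshold of 50, and the sensor labels are assumptions, and a real system would likely use more robust exposure statistics than a plain mean.

```python
def is_low_light(gray_frame, threshold=50.0):
    """Return True when the mean pixel intensity (0-255) falls below threshold.

    `gray_frame` is a non-empty 2D list of grayscale values.
    The threshold is a hypothetical value chosen for illustration.
    """
    total = sum(sum(row) for row in gray_frame)
    count = sum(len(row) for row in gray_frame)
    return (total / count) < threshold


def select_sensors(gray_frame):
    """Pick a (hypothetical) sensor set based on the lighting condition."""
    if is_low_light(gray_frame):
        # Low light: lean on the sensors the article names for night driving.
        return ["lidar", "hdr_sensor", "thermal_camera"]
    return ["camera"]


night_frame = [[10, 20], [30, 40]]       # mean intensity 25
day_frame = [[200, 210], [190, 220]]     # mean intensity 205
```

Calling `select_sensors(night_frame)` returns the low-light sensor set, while `select_sensors(day_frame)` falls back to the standard camera.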

Vehicle Tracking and Lane Detection

Changing lanes can be a daunting task for autonomous vehicles. With assistance from deep learning, Computer Vision can use segmentation techniques to identify lanes on the road and keep the vehicle in its designated lane. To track other vehicles and understand their behavioral patterns, Computer Vision uses bounding box algorithms to assess their positions.
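The article does not say which bounding box algorithm is used for tracking. A common building block is intersection over union (IoU), which scores how much two boxes overlap; the sketch below uses it to match a vehicle's box from the previous frame to a detection in the current frame. The function names and the `min_iou` value of 0.3 are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    inter_h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # boxes do not overlap
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


def match_track(previous_box, detections, min_iou=0.3):
    """Return the index of the detection that best overlaps previous_box.

    Returns None when no detection reaches the (hypothetical) min_iou score.
    """
    best_index, best_score = None, min_iou
    for index, box in enumerate(detections):
        score = iou(previous_box, box)
        if score >= best_score:
            best_index, best_score = index, score
    return best_index


# A tracked vehicle and two detections from the next frame (made-up values).
tracked = (0, 0, 10, 10)
detections = [(50, 50, 60, 60), (1, 1, 11, 11)]
matched = match_track(tracked, detections)
```

Here the second detection overlaps the tracked box heavily, so it is treated as the same vehicle one frame later.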

Assisted Parking

Advances in deep learning with convolutional neural networks (CNNs) have drastically improved the accuracy of object detection. Outward-facing cameras, 3D reconstruction, and recognition of parking slot markings make it much easier for autonomous vehicles to park in congested spaces, saving time and effort. In addition, IoT-enabled smart parking systems determine the occupancy of a parking lot and send notifications to connected vehicles nearby.

Insights to Driver Behaviour

With inward-facing cameras, Computer Vision can monitor a driver's gestures, eye movement, drowsiness, speedometer readings, phone usage, and other factors that directly affect accident risk and passenger safety. Monitoring these parameters and alerting drivers in time helps avoid fatal road incidents and improves safety. For logistics and fleet companies in particular, the vision system can provide real-time data to improve driver performance and, in turn, business outcomes.
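As a rough illustration of how drowsiness monitoring could turn into a timely alert, the sketch below keeps a sliding window of per-frame "eyes closed" flags and raises an alert when the closed fraction gets too high, in the spirit of the PERCLOS measure. Everything here is an assumption: the class name, the window size, the alert ratio, and the existence of an upstream eye-state detector that supplies the flags.

```python
from collections import deque


class DrowsinessMonitor:
    """Alert when the eyes are closed in too large a share of recent frames."""

    def __init__(self, window_size=30, closed_ratio_alert=0.4):
        # Both defaults are hypothetical values chosen for illustration.
        self.flags = deque(maxlen=window_size)
        self.closed_ratio_alert = closed_ratio_alert

    def update(self, eyes_closed):
        """Record one frame and return True if an alert should be raised."""
        self.flags.append(bool(eyes_closed))
        if len(self.flags) < self.flags.maxlen:
            return False  # not enough history yet
        closed_ratio = sum(self.flags) / len(self.flags)
        return closed_ratio >= self.closed_ratio_alert


# Hypothetical stream of eye-state flags from an inward-facing camera.
monitor = DrowsinessMonitor(window_size=5, closed_ratio_alert=0.5)
alerts = [monitor.update(flag) for flag in [True, True, True, True, False, False, False]]
```

The monitor stays silent until the window is full, alerts while most recent frames show closed eyes, and clears again once the driver's eyes have been open for enough frames.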

Vision solutions are gaining immense popularity in the automotive industry. With deep learning algorithms for route planning, object detection, and decision making, driven by powerful GPUs and supported by technologies ranging from SAR and thermal camera hardware to LIDAR and HDR sensors and radars, autonomous driving is becoming simpler to put into practice.

At MosChip, we help automotive businesses design Computer Vision-based solutions such as automatic parallel parking, traffic sign recognition, object and lane detection, and driver attention systems, built on FPGAs, CPUs, and microcontrollers. Our team of experts has experience working with autonomous driving platforms, functions, middleware, and standards such as Adaptive AUTOSAR, FuSa (ISO 26262), and MISRA C. We support our clients throughout the entire journey of intelligent automotive solution design.

About MosChip:

MosChip has 20+ years of experience in Semiconductor, Embedded Systems & Software Design, Security, and Product Engineering services, with a team of 1300+ engineers.

Established in 1999, MosChip has development centers in Hyderabad, Bangalore, Pune, and Ahmedabad (India) and a branch office in Santa Clara, USA. Our embedded expertise covers platform enablement (FPGA/ASIC/SoC/processors), firmware and driver development, embedded systems security, BSP and board bring-up, OS porting, middleware integration, product re-engineering and sustenance, device and embedded testing, test automation, IoT, AI/ML solution design, and more. Our semiconductor offerings cover silicon design, verification, validation, and turnkey ASIC services. We are also a TSMC DCA (Design Center Alliance) Partner.
