An overview of Embedded Machine Learning techniques and their associated benefits

Owing to revolutionary developments in computer architecture and ground-breaking advances in AI and machine learning applications, embedded systems technology is going through a transformational period. By design, machine learning models use a lot of resources and demand a powerful computing infrastructure. They are therefore typically run on devices with more resources, like PCs or cloud servers, where data processing is efficient. Thanks to recent developments in machine learning algorithms, ML frameworks, and processor computing capacity, machine learning applications can now be deployed directly on embedded devices. This is referred to as Embedded Machine Learning (E-ML).

Embedded machine learning techniques move the processing closer to the edge, where the sensors collect data. This helps remove obstacles such as bandwidth and connectivity limits, security breaches during data transfer over the internet, and the power consumed by data transmission. It also supports the use of neural networks and other machine learning frameworks, as well as signal processing services, model construction, gesture recognition, and so on. Between 2021 and 2026, the global market for embedded AI is anticipated to expand at a 5.4 percent CAGR and reach about USD 38.87 billion, according to reports from the Maximize Market Research group.

The Underlying Concept of Embedded Machine Learning

Today, embedded computing systems are quickly spreading into every sphere of human endeavour, finding practical use in everything from wearable health monitoring systems, networked Internet of Things (IoT) systems, and smart appliances for home automation to antilock braking systems in automobiles. Common ML techniques used on embedded platforms include SVMs (support vector machines), CNNs (convolutional neural networks), DNNs (deep neural networks), k-NN (k-nearest neighbours), and Naive Bayes. Efficient training and inference with these techniques require substantial processing and memory resources. Even with deep cache hierarchies, multicore improvements, and similar advances, general-purpose CPUs often struggle to meet the high computational demands of deep learning models. These constraints can be overcome by using accelerators such as GPUs and TPUs. This is mainly because non-trivial deep learning applications are built on linear algebra computations, such as matrix and vector operations, which GPUs and TPUs execute very efficiently, making them ideal computing platforms.
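To make the computational demand concrete, the sketch below counts the multiply-accumulate (MAC) operations needed for one forward pass through a small fully connected network. The layer sizes are hypothetical and chosen only for illustration, bias additions are ignored, and `dense_macs` and `network_macs` are illustrative helper names rather than functions from any library.

```python
def dense_macs(n_inputs: int, n_outputs: int) -> int:
    """MACs for one dense layer: each output needs one multiply-add per input."""
    return n_inputs * n_outputs


def network_macs(layer_sizes: list[int]) -> int:
    """Total MACs for a forward pass through consecutive dense layers."""
    return sum(dense_macs(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 784 inputs, two hidden layers, 10 outputs.
sizes = [784, 128, 64, 10]
total = network_macs(sizes)
print(total)  # 109184
```

Even this small network needs over a hundred thousand multiply-adds per input, and every one of them is part of a matrix-vector product, which is the kind of work that parallel accelerators handle well.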

Running machine learning models on embedded hardware is referred to as embedded machine learning. It works according to the following fundamental principle: the training of ML models such as neural networks takes place on computing clusters or in the cloud, while model execution and inference take place on the embedded devices. Contrary to popular belief, deep learning matrix operations can be carried out effectively on hardware with constrained CPU capabilities, or even on tiny 16-bit/32-bit microcontrollers.

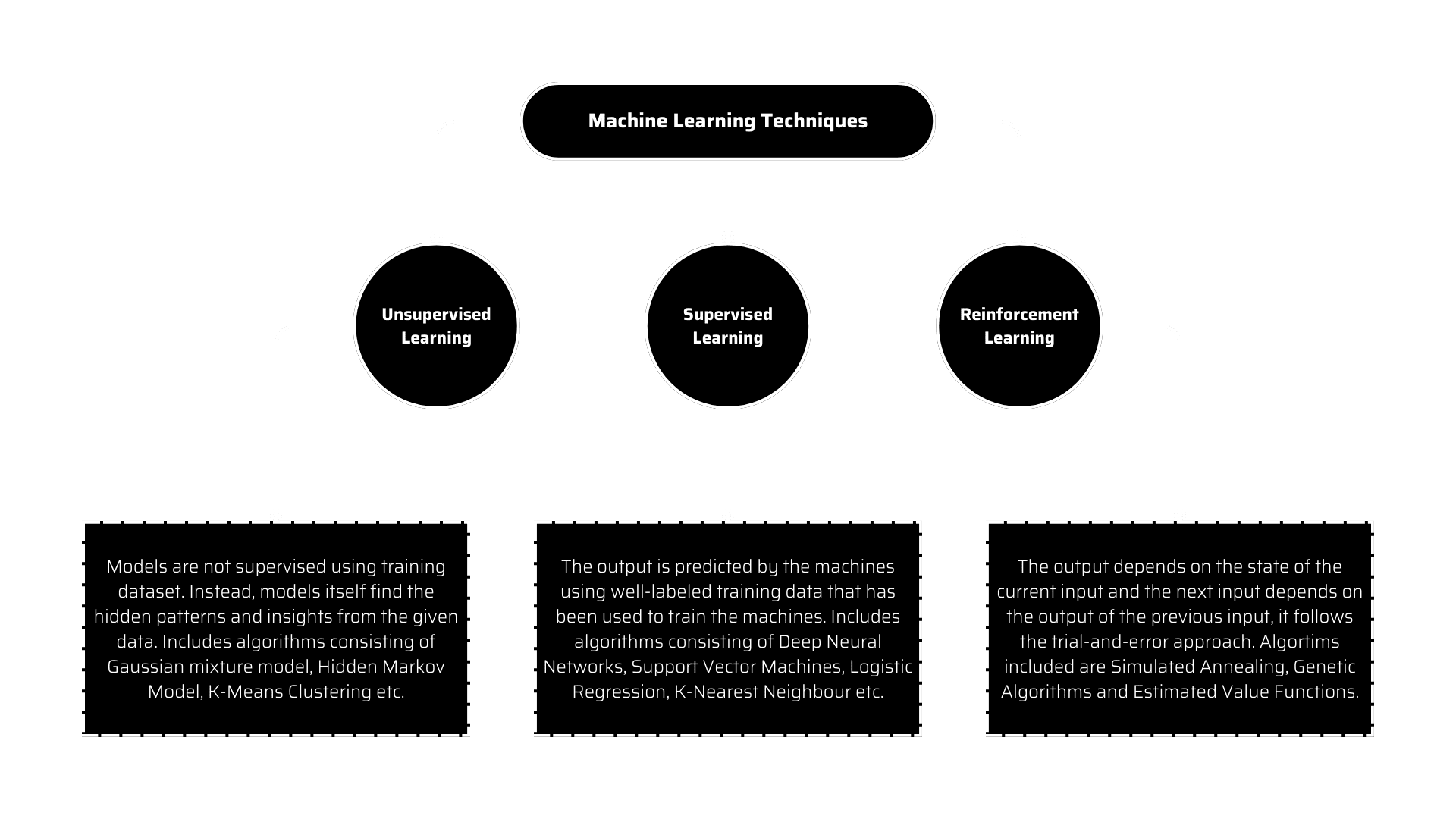
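One common way to fit matrix operations onto small microcontrollers, which this article does not describe in detail, is to quantize the trained floating-point weights to 8-bit integers so that the device only performs integer multiply-accumulates. The following is a minimal sketch of that idea under simplifying assumptions: it uses a symmetric per-tensor scale, the weight and input values are hypothetical, and `quantize_tensor` and `int_matvec` are illustrative names, not the API of any real framework.

```python
def quantize_tensor(values):
    """Symmetric per-tensor quantization of floats to the int8 range."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int_matvec(w_q, x_q):
    """Matrix-vector product using integer multiply-accumulate only."""
    return [sum(w * x for w, x in zip(row, x_q)) for row in w_q]


# "Cloud" side: float weights of a trained layer (hypothetical values).
weights = [[0.5, -1.0, 0.25],
           [1.5, 0.75, -0.5]]
flat_q, w_scale = quantize_tensor([w for row in weights for w in row])
n_cols = len(weights[0])
w_q = [flat_q[i:i + n_cols] for i in range(0, len(flat_q), n_cols)]

# "Device" side: quantize the sensor input, then run integer arithmetic.
x = [1.0, 2.0, -1.0]
x_q, x_scale = quantize_tensor(x)
acc = int_matvec(w_q, x_q)

# Rescale the integer accumulators back to real-valued outputs.
y_approx = [a * w_scale * x_scale for a in acc]
y_exact = [sum(w * v for w, v in zip(row, x)) for row in weights]
print(y_approx, y_exact)
```

The quantized result stays close to the floating-point one, while the inner loop needs no floating-point unit. Production toolchains use more elaborate schemes (for example per-channel scales and zero points), so treat this only as an illustration of the principle.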

The type of embedded machine learning that uses extremely small pieces of hardware, such as ultra-low-power microcontrollers, to run ML models is called TinyML.

Machine learning approaches can be divided into three main categories: supervised learning, unsupervised learning, and reinforcement learning. In supervised learning, the system learns from labelled data; in unsupervised learning, it finds hidden patterns in unlabelled data; and in reinforcement learning, it learns from its immediate environment through trial and error. The learning process is known as the model’s “training phase,” and it is frequently carried out on computer architectures with plenty of processing power, such as multi-GPU systems. After learning, the trained model is applied to new data to make intelligent decisions. This step is referred to as the inference phase. Inference is frequently meant to run on IoT and mobile computing devices, as well as other user devices with limited processing resources.
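The distinction between the training phase and the inference phase can be illustrated with a deliberately tiny supervised model. The sketch below uses a nearest-centroid classifier, chosen here only because it is short; the sensor readings, labels, and the function names `train` and `infer` are all hypothetical.

```python
def train(samples, labels):
    """Training phase: compute one centroid per class from labelled data."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def infer(model, x):
    """Inference phase: return the label of the closest centroid."""
    def dist2(c):
        return sum((a - b) ** 2 for a, b in zip(x, c))
    return min(model, key=lambda y: dist2(model[y]))


# Hypothetical labelled readings: (temperature, vibration) -> machine state.
samples = [[20.0, 0.1], [22.0, 0.2], [60.0, 0.9], [65.0, 1.1]]
labels = ["normal", "normal", "fault", "fault"]

model = train(samples, labels)      # done once, off-device
print(infer(model, [21.0, 0.15]))   # prints "normal"
print(infer(model, [63.0, 1.0]))    # prints "fault"
```

In this sketch `train` needs the whole labelled data set, whereas `infer` only needs the small `model` dictionary and one new reading, which is why the second step is the one that fits on a resource-limited device.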

Machine Learning Techniques

Application Areas of Embedded Machine Learning

Intelligent Sensor Systems

The effective application of machine learning techniques within embedded sensor network systems is generating considerable interest. Numerous machine learning algorithms, including GMMs (Gaussian mixture models), SVMs, and DNNs, are finding practical uses in important fields such as mobile ad hoc networks, intelligent wearable systems, and intelligent sensor networks.

Heterogeneous Computing Systems

Computer systems containing multiple types of processing cores are referred to as heterogeneous computing systems. In most such systems, the additional cores serve as acceleration units that take computationally demanding tasks off the CPU and speed up the system as a whole. Heterogeneous multicore architecture is one area of application: to speed up computationally expensive machine learning techniques, a middleware platform integrates a GPU accelerator into an existing CPU-based architecture, thereby improving the efficiency with which ML models and data sets are processed.

Embedded FPGAs

Due to their low cost, high performance, energy efficiency, and flexibility, FPGAs are becoming increasingly popular in the computing industry. They are frequently used to prototype ASIC architectures and to design acceleration units. CNN optimization using FPGAs and OpenCL-based FPGA hardware acceleration are areas of application where FPGA architectures are used to speed up the execution of machine learning models.

Benefits

Efficient Network Bandwidth and Power Consumption

Machine learning models running on embedded hardware make it possible to extract features and insights directly at the data source. As a result, there is no longer any need to transport the raw data to edge or cloud servers, which saves bandwidth and system resources. Microcontrollers are among the many power-efficient embedded systems that can run for long periods without being recharged. In contrast to machine learning applications running on mobile computing systems, which consume a substantial amount of power, TinyML can considerably extend the power autonomy of machine learning applications on embedded platforms.
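As a rough back-of-the-envelope illustration of the bandwidth saving, the sketch below compares the data rate of streaming every sensor sample off the device with the data rate of sending only inference results. All numbers (sample rate, sample width, result size) are hypothetical assumptions, and the two helper functions are illustrative names only.

```python
def stream_bytes_per_second(sample_rate_hz, channels, bytes_per_sample):
    """Data rate when every raw sample is sent off the device."""
    return sample_rate_hz * channels * bytes_per_sample


def result_bytes_per_second(inferences_per_second, bytes_per_result):
    """Data rate when only inference results are sent."""
    return inferences_per_second * bytes_per_result


# Hypothetical sensor: 3-axis accelerometer, 16-bit samples at 100 Hz.
raw = stream_bytes_per_second(sample_rate_hz=100, channels=3, bytes_per_sample=2)

# Hypothetical on-device model: one 1-byte class label per second.
local = result_bytes_per_second(inferences_per_second=1, bytes_per_result=1)

print(raw, local, raw // local)  # 600 1 600
```

Under these assumed figures the device transmits 600 times less data, and since radio transmission is typically a major part of a sensor node's energy budget, less traffic also tends to mean longer battery life.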

Comprehensive Privacy

Embedded machine learning eliminates the need to transfer data to cloud servers and store it there. This lessens the likelihood of data breaches and privacy leaks, which is crucial for applications that handle sensitive data such as personal information about individuals, medical data, intellectual property (IP), and classified information.

Low Latency

Embedded ML supports low-latency operation because it eliminates the need for extensive data transfers to the cloud. As a result, embedded machine learning is a great option for enabling real-time use cases such as field actuation and control in various industrial scenarios.

Embedded machine learning applications are built using methods and tools that make it possible to create and deploy machine learning models on nodes with limited resources. They offer a wealth of innovative opportunities for businesses looking to maximize the value of their data, and they help optimize the bandwidth, storage, and latency of those businesses' machine learning applications.

MosChip AI/ML experts have extensive expertise in creating efficient ML solutions for a variety of edge platforms, including CPUs, GPUs, TPUs, and neural network compilers. We also offer secure embedded systems development and FPGA design services by combining the best design methodologies with the appropriate technology stacks. We help businesses build high-performance cloud and edge-based ML solutions such as object/lane detection, face/gesture recognition, human counting, key-phrase/voice command detection, and more across various platforms.
