Developing TPU-based AI Solutions Using TensorFlow Lite

AI has become ubiquitous, from personal devices to enterprise applications. The advent of IoT, combined with bandwidth constraints and rising demand for data privacy, low power consumption, and low latency, has increasingly pushed AI models to run at the edge instead of in the cloud. According to Grand View Research, the global edge artificial intelligence chips market was valued at USD 1.8 billion in 2019 and is expected to grow at a CAGR of 21.3 percent from 2020 to 2027. Against this backdrop, Google introduced the Edge TPU, also known as the Coral TPU, its purpose-built ASIC for running AI at the edge. It is designed to deliver excellent performance while taking up minimal space and power.

Training an AI model typically produces a model with high storage requirements that needs GPU-class processing power. Such models cannot be executed on devices with small memory and processing footprints. This is where TensorFlow Lite is useful. TensorFlow Lite is an open-source deep learning framework for on-device inference, and models built for it can run on the Edge TPU. Note that TensorFlow Lite is only for running inference at the edge, not for training a model. To train an AI model, we must use TensorFlow.

Combining Edge TPU and TensorFlow Lite

When it comes to deploying an AI model on the Edge TPU, we cannot deploy just any AI model.

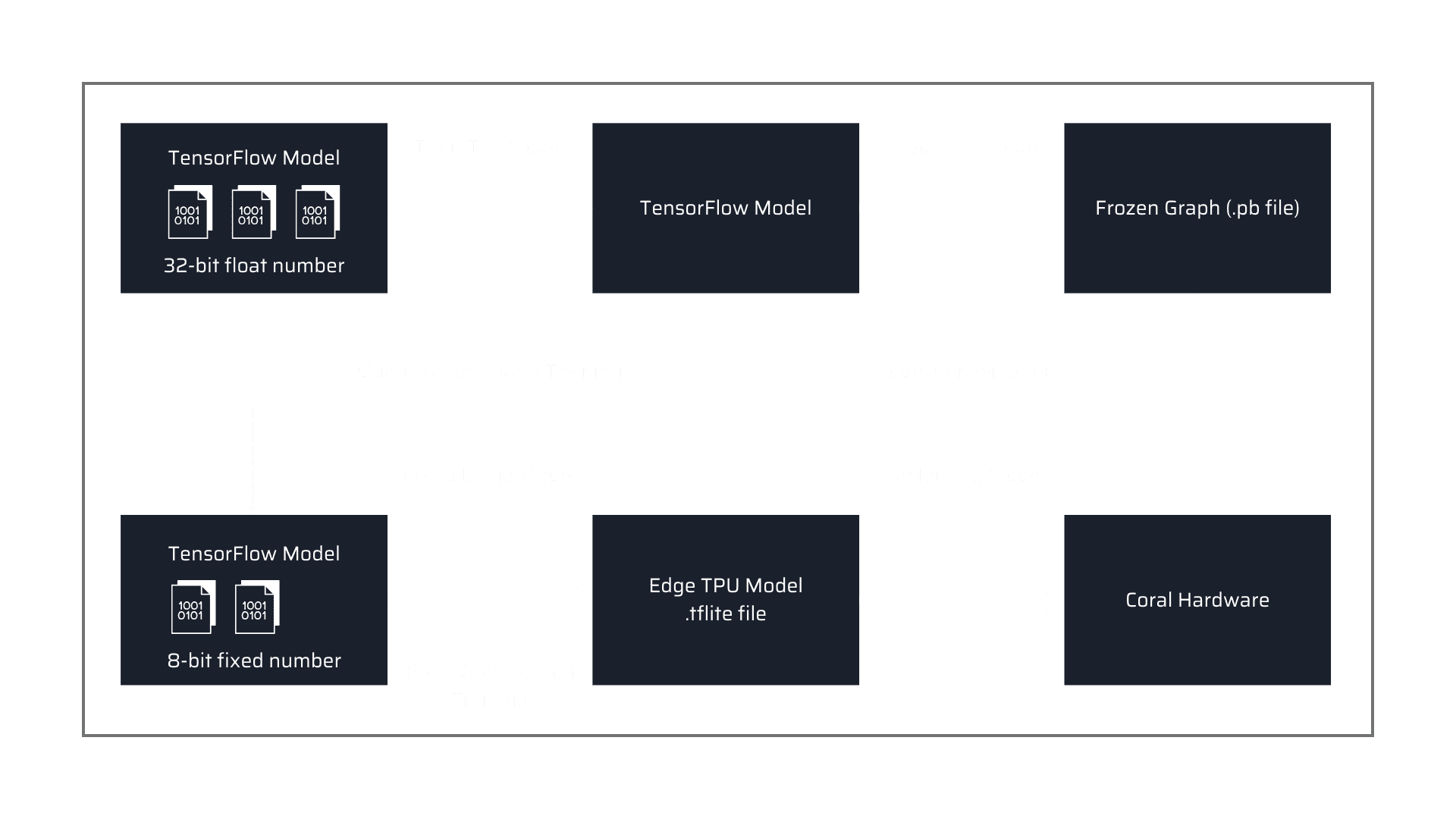

The Edge TPU supports a specific set of neural network (NN) operations and architectures, and it is designed to enable high-speed neural network performance with low power consumption. Beyond this restriction on network types, it only runs TensorFlow Lite models that are 8-bit quantized and compiled for the Edge TPU.
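To make "8-bit quantized" concrete, here is a minimal sketch of the affine mapping that TensorFlow Lite uses for quantized tensors, where a real value is approximated as `scale * (q - zero_point)`. The helper names and the simple min/max calibration are assumptions for illustration, not the converter's actual implementation.

```python
def quantize_params(rmin, rmax, qmin=-128, qmax=127):
    """Return (scale, zero_point) mapping the real range [rmin, rmax] onto int8."""
    # The representable range must contain 0.0 so that zero maps to an exact integer.
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / (qmax - qmin)
    if scale == 0.0:  # degenerate case: every calibration value was 0
        return 1.0, 0
    zero_point = int(round(qmin - rmin / scale))
    return scale, max(qmin, min(qmax, zero_point))


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a real value to its 8-bit integer code, clamping out-of-range inputs."""
    q = int(round(x / scale)) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from an 8-bit code."""
    return scale * (q - zero_point)


# Hypothetical activation range observed during calibration.
scale, zero_point = quantize_params(0.0, 2.55)
code = quantize(1.0, scale, zero_point)
approx = dequantize(code, scale, zero_point)
```

Each weight or activation is stored in one byte instead of four, at the cost of a rounding error of at most about half of `scale` for values inside the calibrated range.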

As a quick summary, TensorFlow Lite is a lightweight version of TensorFlow designed specifically for mobile and embedded devices. It achieves low-latency results with a small storage footprint. The TensorFlow Lite converter turns a TensorFlow-based AI model file (.pb) into a TensorFlow Lite file (.tflite). A standard workflow for deploying applications on the Edge TPU is to train the model in TensorFlow, convert it to a quantized TensorFlow Lite file, compile that file for the Edge TPU, and deploy it to the device.
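The conversion step of this workflow can be sketched with the TensorFlow Lite converter's Python API. This is a sketch under assumptions: the model is available as a SavedModel directory (a frozen .pb graph would need the `tf.compat.v1` converter instead), `sample_inputs` is a hypothetical iterable of float32 arrays shaped like the model input, and the function names are illustrative.

```python
from pathlib import Path


def edgetpu_output_name(tflite_path):
    """File name the Edge TPU Compiler gives its output: <name>_edgetpu.tflite."""
    return Path(tflite_path).stem + "_edgetpu.tflite"


def convert_to_int8_tflite(saved_model_dir, out_path, sample_inputs):
    """Convert a SavedModel into a fully 8-bit quantized .tflite file."""
    # Imported here so the naming helper above can be used without TensorFlow.
    import tensorflow as tf

    def representative_dataset():
        # The converter runs these samples to calibrate quantization ranges.
        for sample in sample_inputs:
            yield [sample]

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    converter.representative_dataset = representative_dataset
    # Fail the conversion if any operation cannot be expressed in 8-bit integers.
    converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
    converter.inference_input_type = tf.uint8
    converter.inference_output_type = tf.uint8
    Path(out_path).write_bytes(converter.convert())
```

After conversion, the resulting file is passed to the Edge TPU Compiler on the command line (`edgetpu_compiler model.tflite`), which writes the compiled model under the name returned by `edgetpu_output_name`.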

Let’s look at some interesting real-world applications that can be built using TensorFlow Lite on the Edge TPU.

Human Detection and Counting

This solution has many practical applications, especially in malls, retail stores, government offices, banks, and enterprises. One may wonder what can be done with detecting and counting humans. Data today is worth both time and money, so let us see how the insights from human detection and counting can be used.
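As a rough illustration of the counting step, the sketch below assumes an object detection model has already run on a camera frame and produced labeled detections with confidence scores. The `Detection` type, the `"person"` label, and the 0.5 threshold are hypothetical choices for this example.

```python
from typing import Iterable, NamedTuple


class Detection(NamedTuple):
    """One detected object in a frame (hypothetical structure)."""
    label: str
    score: float


def count_people(detections: Iterable[Detection], min_score: float = 0.5) -> int:
    """Count detections labeled as a person with sufficient confidence."""
    return sum(1 for d in detections if d.label == "person" and d.score >= min_score)


# Example frame: two confident people, one low-confidence person, one chair.
frame = [
    Detection("person", 0.91),
    Detection("person", 0.77),
    Detection("person", 0.32),
    Detection("chair", 0.88),
]
people_in_frame = count_people(frame)
```

Per-frame counts like this can then be aggregated over time to estimate footfall or queue length.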

Estimating Footfalls

For the retail industry, this is important because it indicates whether stores are doing well and whether their displays are attracting customers into the shops. It also helps retailers decide whether to increase or decrease support staff. For other organizations, footfall estimates help in taking adequate security measures for people.

Crowd Analytics and Queue Management

For government offices and enterprises, queue management via human detection and counting helps manage long queues and save people’s time. Queue data can also serve as an indicator of individual and organizational performance. Crowd detection can help raise alerts for emergencies, security incidents, and similar events so that appropriate action can be taken. Such solutions give the best results when deployed at the edge, because the required actions can be taken close to real time.

Age and Gender-based Targeted Advertisements

This solution mainly has practical applications in the retail and advertising industries. Imagine walking towards an advertisement display that is showing a women’s shoe ad, and the display suddenly switches to a men’s shoe ad because it has determined that you are male. Targeted advertisements help retailers and manufacturers target their products better and build brand awareness among people who might otherwise never notice the product in their busy lives.

This is not restricted to advertisements. Age and gender detection can also help businesses make quick decisions, such as assigning appropriate support staff in retail stores or understanding which age groups and genders prefer to visit a store or business. All of this is more powerful and effective when detection and action happen quickly, which is one more reason to run this solution on the Edge TPU.

Face Recognition

The very first face recognition system was built in 1970, and the technology is still being developed today to make it more robust and effective. The main advantage of running face recognition at the edge is real-time recognition. Another advantage is that encryption and feature extraction happen at the edge, and only the encrypted, extracted data is sent to the cloud for matching. Because face images are not saved at the edge or in the cloud, this protects the privacy of personally identifiable information (PII) and helps comply with stringent privacy laws.
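The split between edge and cloud can be sketched as follows. This is an illustrative sketch only: it assumes a face model on the device has already produced a feature vector (embedding), the function names and the 0.8 threshold are hypothetical, and encryption is left out because it would be handled by the transport layer or a cryptography library.

```python
import json
import math


def build_payload(device_id, embedding):
    """Edge side: package only the extracted features, never the face image."""
    return json.dumps({
        "device_id": device_id,
        "embedding": [round(float(v), 6) for v in embedding],
    })


def cosine_similarity(a, b):
    """Similarity of two feature vectors, in the range [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_match(payload, enrolled_embedding, threshold=0.8):
    """Cloud side: compare the received features with an enrolled identity."""
    probe = json.loads(payload)["embedding"]
    return cosine_similarity(probe, enrolled_embedding) >= threshold


# Toy two-dimensional embeddings; real face embeddings have many more dimensions.
payload = build_payload("camera-1", [1.0, 0.0])
same_person = is_match(payload, [1.0, 0.0])
different_person = is_match(payload, [0.0, 1.0])
```

The payload carries only the device identifier and the feature vector, so the cloud service can perform matching without ever receiving the face image.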

The Edge TPU combined with the TensorFlow Lite framework opens up many opportunities for edge AI applications. Because the framework is open source, the Open-Source Software (OSS) community also supports it, which makes it even more popular for machine learning use cases. Overall, the TensorFlow Lite platform fosters the growth of edge applications for embedded and IoT devices.

At MosChip, we provide AI engineering and machine learning services and solutions, with expertise in edge platforms (TPU, RPi, FPGA), NN compilers for the edge, cloud platform accelerators like AWS, Azure, and AMD, and tools like TensorFlow, TensorFlow Lite, Docker, Git, AWS DeepLens, JetPack SDK, and many more, targeted at domains like Automotive, Multimedia, Industrial IoT, Healthcare, Consumer, and Security-Surveillance. MosChip helps businesses build high-performance cloud and edge-based ML solutions such as object/lane detection, face/gesture recognition, human counting, and key-phrase/voice command detection across various platforms.

About MosChip:

MosChip has 20+ years of experience in Semiconductor, Embedded Systems & Software Design, and Product Engineering services, with a team of 1300+ engineers.

Established in 1999, MosChip has development centers in Hyderabad, Bangalore, Pune, and Ahmedabad (India) and a branch office in Santa Clara, USA. Our embedded expertise involves platform enablement (FPGA/ASIC/SoC/processors), firmware and driver development, BSP and board bring-up, OS porting, middleware integration, product re-engineering and sustenance, device and embedded testing, test automation, IoT, AI/ML solution design, and more. Our semiconductor offerings involve silicon design, verification, validation, and turnkey ASIC services. We are also a TSMC DCA (Design Center Alliance) Partner.
