The Rise of Containerized Applications for Accelerated AI Solutions

At the end of 2021, the artificial intelligence market was valued at an estimated $58.3 billion. That figure is projected to grow more than fivefold over the next five years and reach $309.6 billion by 2026. Given this popularity, companies are keen to build and deploy AI applications for their businesses. In today’s technology-driven world, AI has become an integral part of our lives. According to a report by McKinsey, AI adoption is continuing its steady rise: 56% of all respondents report AI adoption in at least one business function, up from 50% in 2020. This increase in adoption is due to evolving strategies for building and deploying AI models and applications. Containerized applications are one such strategy.

MLOps practices are becoming increasingly mature. If you are unfamiliar with it, MLOps (machine learning operations) is a collection of principles, practices, and technologies that help increase the efficiency of machine learning workflows. It is based on DevOps, and just as DevOps has streamlined the software development life cycle (SDLC) from development to deployment, MLOps does the same for machine learning applications. Containerization is one of the most intriguing emerging technologies for developing and delivering AI applications. A container is a standard unit of software packaging that encapsulates code and all its dependencies in a single package, allowing programs to move from one computing environment to another quickly and reliably. Docker is at the forefront of application containerization.

What Are Containers?

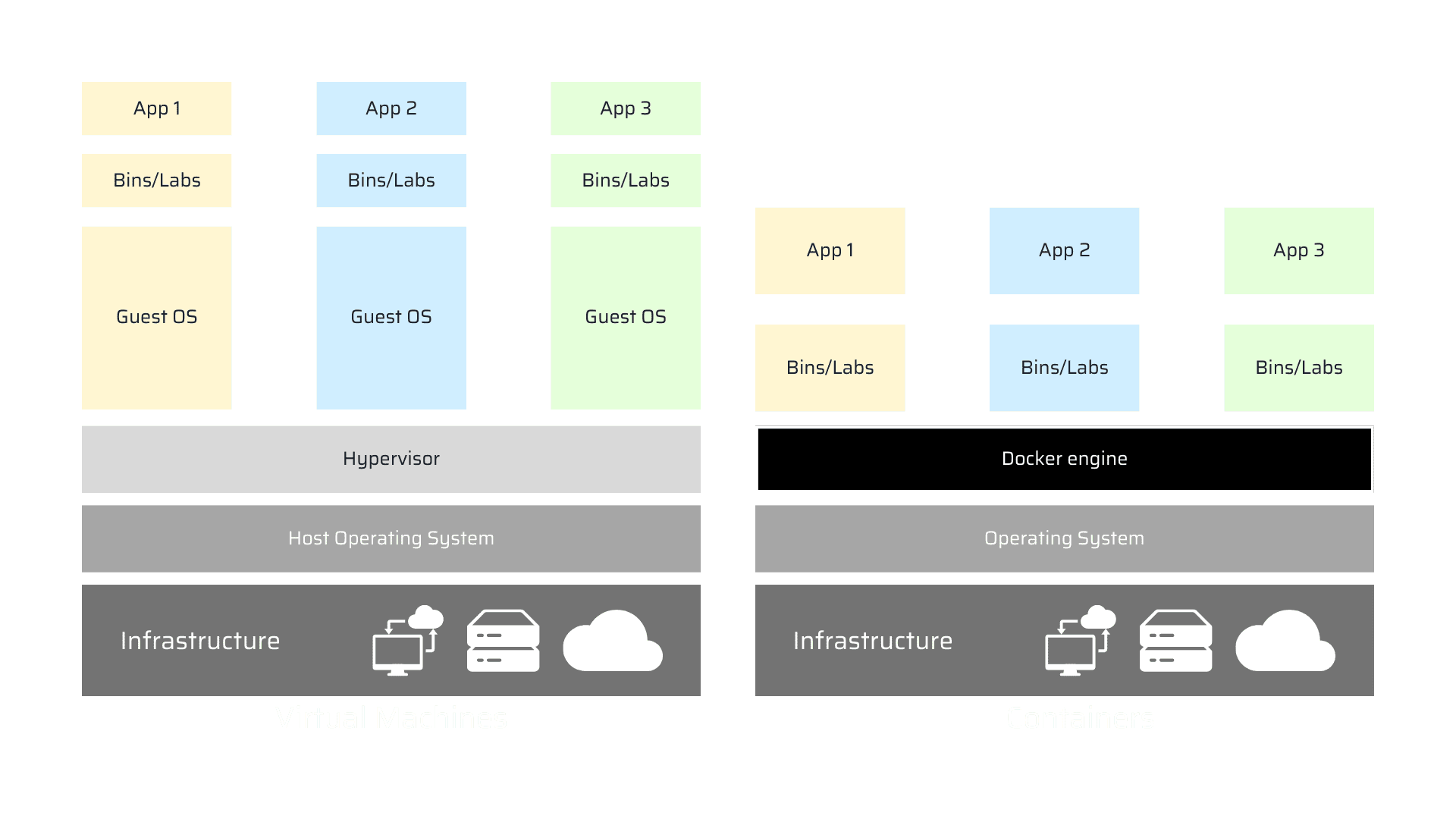

Containers are logical boxes that contain everything an application requires to execute. The application code, runtime, system tools, system libraries, binaries, and the user-space portion of the operating system are all included in this software bundle. Some dependencies may be included or excluded depending on the availability of specific hardware. Containers run directly on the host machine’s kernel: they share the host’s resources (CPU, disks, memory, and so on) and avoid the extra load of a hypervisor. This is why containers are “lightweight”.
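To make the shared-kernel idea concrete, here is a minimal Python sketch (not part of the original article) that reports the kernel in use alongside the user-space distribution. Run inside a Linux container, the kernel release is the host's, while the distribution name comes from the container image. The function name `describe_runtime` is made up for illustration, and reading `/etc/os-release` assumes a Linux-style filesystem.

```python
import platform
from pathlib import Path


def describe_runtime(os_release_path="/etc/os-release"):
    """Report the kernel in use and the user-space distribution, if known."""
    info = {
        "kernel_system": platform.system(),    # e.g. "Linux"
        "kernel_release": platform.release(),  # in a container: the host's kernel
        "userland": "unknown",
    }
    path = Path(os_release_path)
    if path.is_file():
        # The distribution name comes from the image's own files,
        # not from the kernel.
        for line in path.read_text(encoding="utf-8").splitlines():
            if line.startswith("PRETTY_NAME="):
                info["userland"] = line.split("=", 1)[1].strip().strip('"')
                break
    return info


if __name__ == "__main__":
    for key, value in describe_runtime().items():
        print(f"{key}: {value}")
```

Running the same script on the host and inside a container built from a different distribution should show the same kernel release but a different user-space name.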

Why Are Containers So Popular?

- First, they are lightweight, since containers share the host machine’s operating system kernel and do not need an entire operating system in place to run the application. Virtual machines (VMs), such as those created with VirtualBox, require installation of a complete OS, which makes them quite bulky.

- Containers are portable and can easily be moved from one machine to another with all the required dependencies inside them. They also enable developers and operators to improve the CPU and memory utilization of physical machines.

- Among container technologies, Docker is the most popular and widely used platform. Not only have Linux vendors such as Red Hat and Canonical embraced Docker, but companies like Microsoft, Amazon, and Oracle also rely on it. Today, almost all IT and cloud companies have adopted Docker, and it is widely used to ship their solutions with all dependencies included.

Virtual Machines vs Containers

Is There Any Difference between Docker and Containers?

- Docker has become widely synonymous with containers because it is open source, has a huge community, and is a quite stable platform. But container technology isn’t new: it has been part of Linux in the form of LXC for more than 10 years, and similar operating-system-level virtualization has also been offered by FreeBSD jails, AIX Workload Partitions, and Solaris Containers.

- Docker makes the process easier by merging OS and package requirements into a single package, which is one of the differences between containers in general and Docker.

- People are often puzzled as to why Docker is used in data science and artificial intelligence when it is mostly associated with DevOps. ML and AI, like DevOps, have cross-OS dependencies. With Docker, the same code can run on Ubuntu, Windows, AWS, Azure, Google Cloud, ROS, a variety of edge devices, or anywhere else.

Container Applications for AI / ML:

Like any software development, AI applications face SDLC challenges when they are assembled and run by several developers in a team or across multiple teams. Because AI applications are constantly iterative and experimental, there comes a point where dependencies wind up crisscrossing and cause problems for other dependent libraries in the same project.

To Explain:

The need for Container Application for AI / ML

These issues are real, so each step needs adequate documentation if you are presenting a project that requires a specific method of execution. Imagine you have multiple Python virtual environments for different models in the same project. Without up-to-date documentation, you may wonder what these dependencies are for, or why you get conflicts when installing newer libraries or updated models.
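As a rough illustration of how such clashes arise, the sketch below compares exact version pins across the requirement lists of several models and reports packages pinned to different versions. This is a simplified example, not a tool from the original article: the helper names `parse_pins` and `find_conflicts`, the model names, and the pinned versions are all hypothetical, and only `package==version` lines are understood.

```python
from collections import defaultdict


def parse_pins(requirements):
    """Turn lines like 'numpy==1.21.0' into a {package: version} dict."""
    pins = {}
    for line in requirements:
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # this sketch only understands exact pins
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def find_conflicts(model_requirements):
    """Return {package: {version: [models]}} for packages pinned differently."""
    seen = defaultdict(lambda: defaultdict(list))
    for model, requirements in model_requirements.items():
        for package, version in parse_pins(requirements).items():
            seen[package][version].append(model)
    return {
        package: dict(versions)
        for package, versions in seen.items()
        if len(versions) > 1  # more than one distinct pin means a clash
    }


if __name__ == "__main__":
    # Hypothetical models and pins, chosen only to illustrate a clash.
    project = {
        "detector": ["numpy==1.19.5", "tensorflow==2.4.0"],
        "classifier": ["numpy==1.23.5", "torch==1.13.1"],
    }
    for package, versions in find_conflicts(project).items():
        print(package, versions)
```

When two models need incompatible pins like these, installing both into one shared environment cannot satisfy them at once. Giving each model its own container sidesteps the problem.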

Developers constantly face the “It works on my machine” dilemma and constantly try to resolve it.

Why it’s working on my machine

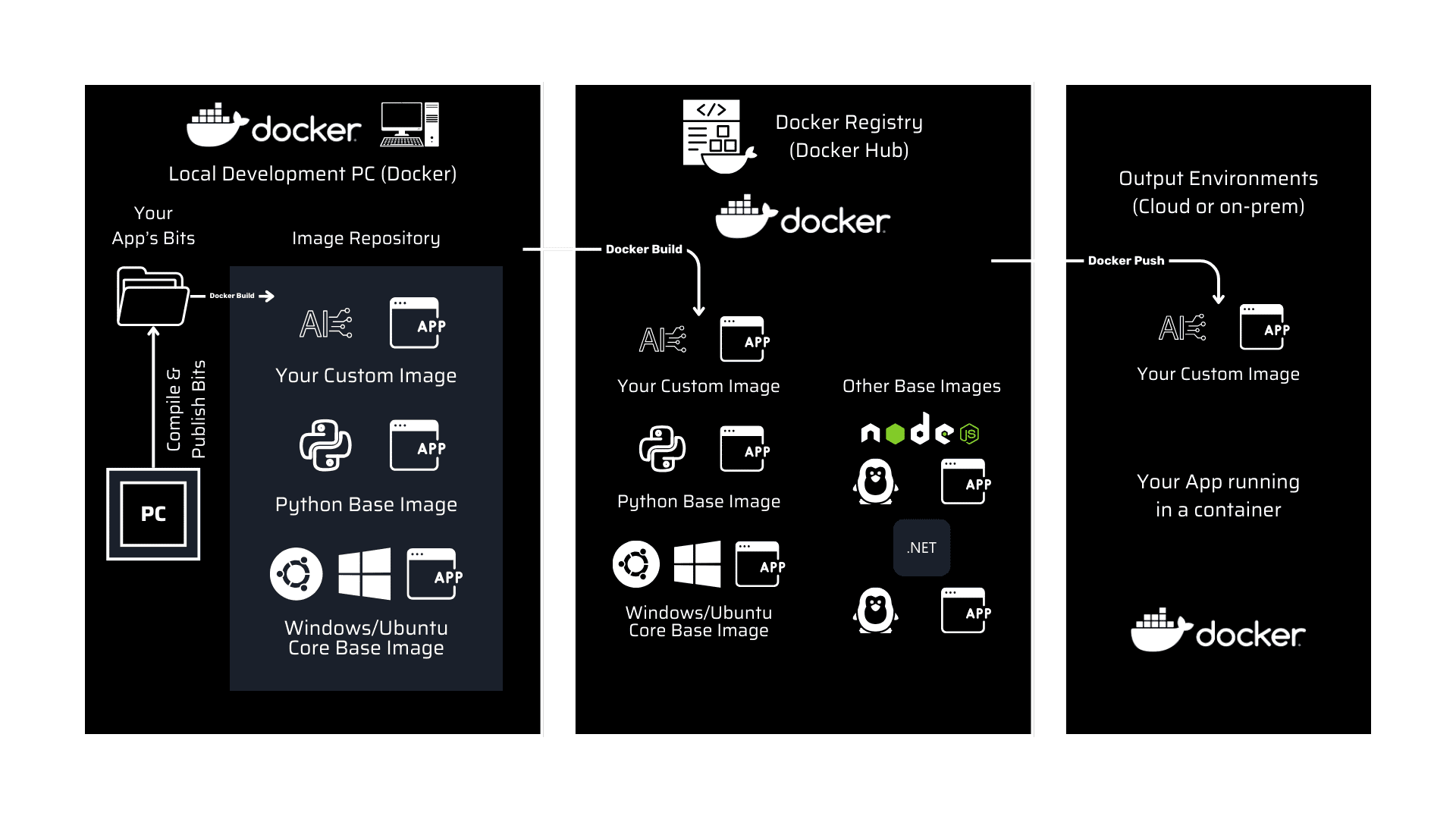

Using Docker, all of this can be made easier and faster. Containerization can save you a lot of time otherwise spent updating documentation, and it makes the development and deployment of your program go more smoothly in the long term. By pulling multiple platform-agnostic images, we can even serve multiple AI models using Docker containers.
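One way to picture serving several models side by side is to give each model image its own container and host port. The Python sketch below only builds the `docker run` command lines and does not execute them. The image names, the container port 8080, and the helper names `docker_run_command` and `plan_model_servers` are assumptions made for illustration. The flags used (`-d`, `--name`, `-p`) are standard `docker run` options.

```python
import shlex


def docker_run_command(name, image, host_port, container_port=8080):
    """Build the argument list for starting one model container."""
    return [
        "docker", "run",
        "-d",                                   # detached: run in the background
        "--name", name,                         # container name
        "-p", f"{host_port}:{container_port}",  # publish host port -> container port
        image,
    ]


def plan_model_servers(images, first_host_port=8001):
    """Assign consecutive host ports to each model image."""
    return [
        docker_run_command(name, image, first_host_port + offset)
        for offset, (name, image) in enumerate(images.items())
    ]


if __name__ == "__main__":
    # Hypothetical image names; substitute images that exist in your registry.
    images = {
        "detector": "example/detector:1.0",
        "classifier": "example/classifier:2.3",
    }
    for command in plan_model_servers(images):
        print(shlex.join(command))
```

Each printed line could be pasted into a shell or passed to `subprocess.run`. Keeping the commands as argument lists avoids quoting problems if names contain unusual characters.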

An application written entirely on the Linux platform can be run on Windows using Docker, which can be installed on a Windows workstation, making code deployment across platforms much easier.

Deployment of code using docker container

Benefits of moving the entire AI application pipeline, from development to deployment, into containers:

- Separate containers for each AI model, covering different versions of frameworks, operating systems, and edge devices or platforms.

- A container for each AI model to customize deployments. For example, one container can be developer-friendly while another is user-friendly and requires no coding to use.

- Individual containers for each AI model for different releases or environments in the AI project (development team, QA team, UAT (User Acceptance Testing), etc.).
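The per-model and per-environment separation described above might be organized as in the following sketch. It renders one Dockerfile per model with its own pinned framework, and derives one image tag per model version and environment. Everything specific here is hypothetical: the `serve.py` entry point, the directory layout, the project name, and the tag scheme are placeholders rather than a convention from the original article.

```python
from string import Template

# Dockerfile skeleton; serve.py is a hypothetical entry point inside model_dir.
DOCKERFILE_TEMPLATE = Template("""\
FROM python:${python_version}-slim
WORKDIR /app
RUN pip install --no-cache-dir ${framework}==${framework_version}
COPY ${model_dir}/ /app/
CMD ["python", "serve.py"]
""")


def render_dockerfile(model_dir, framework, framework_version, python_version="3.10"):
    """Render Dockerfile text for one model with its own pinned framework."""
    return DOCKERFILE_TEMPLATE.substitute(
        python_version=python_version,
        framework=framework,
        framework_version=framework_version,
        model_dir=model_dir,
    )


def image_tag(project, model, version, environment):
    """Name an image so model, release, and environment are all visible."""
    return f"{project}/{model}:{version}-{environment}"


if __name__ == "__main__":
    # Hypothetical project, model, and versions, for illustration only.
    print(render_dockerfile("models/detector", "torch", "1.13.1"))
    for environment in ("dev", "qa", "uat"):
        print(image_tag("acme", "detector", "1.2.0", environment))
```

Because each model gets its own Dockerfile, a framework upgrade for one model does not touch the others, and the environment suffix in the tag keeps development, QA, and UAT images distinguishable.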

Containerized applications accelerate the AI application development-to-deployment pipeline and help maintain and manage multiple models for multiple purposes.

About MosChip:

MosChip has 20+ years of experience in Semiconductor, Embedded Systems & Software Design, and Product Engineering services, with a team of 1300+ engineers.

Established in 1999, MosChip has development centers in Hyderabad, Bangalore, Pune, and Ahmedabad (India) and a branch office in Santa Clara, USA. Our embedded expertise involves platform enablement (FPGA/ASIC/SoC/processors), firmware and driver development, BSP and board bring-up, OS porting, middleware integration, product re-engineering and sustenance, device and embedded testing, test automation, IoT, AI/ML solution design, and more. Our semiconductor offerings involve silicon design, verification, validation, and turnkey ASIC services. We are also a TSMC DCA (Design Center Alliance) Partner.

Author

Kaushal is a Senior Developer at Softnautics and an AI enthusiast whose mission is to develop, tweak, and deploy AI applications for edge devices. He also has vast experience working with electronics and embedded systems and seeks to deliver state-of-the-art AI solutions. He loves to travel in nature and is passionate about aesthetic photography.