Inside HDR10: A technical exploration of High Dynamic Range

High Dynamic Range (HDR) technology has taken the world of visual entertainment, especially streaming media solutions, by storm. It’s the secret sauce that makes images and videos look incredibly lifelike and captivating. From the vibrant colors in your favourite movies to the dazzling graphics in video games, HDR has revolutionized how we perceive visuals on screens. In this blog, we’ll take you on a technical journey into the heart of HDR, focusing on one of its most popular formats, HDR10. By breaking down the complex technical details into simple terms, this blog aims to help readers understand how HDR10 works and how it integrates into streaming media solutions.

What is High Dynamic Range 10 (HDR10)?

HDR10 is the most popular and widely used HDR standard for consuming digital content. Practically every HDR-enabled TV is compatible with HDR10. In the context of video, it provides a significantly enhanced visual experience compared to standard dynamic range (SDR).

Standard Dynamic Range:

People have long experienced visuals through SDR, which has a limited dynamic range. Because of this limitation, SDR cannot capture the full range of brightness and contrast that the human eye can perceive. One can consider it the ‘old way’ of watching movies and TV shows. The arrival of HDR has changed streaming media by offering a much wider dynamic range, resulting in visuals that are more vivid and lifelike.

Understanding the basic visual concepts

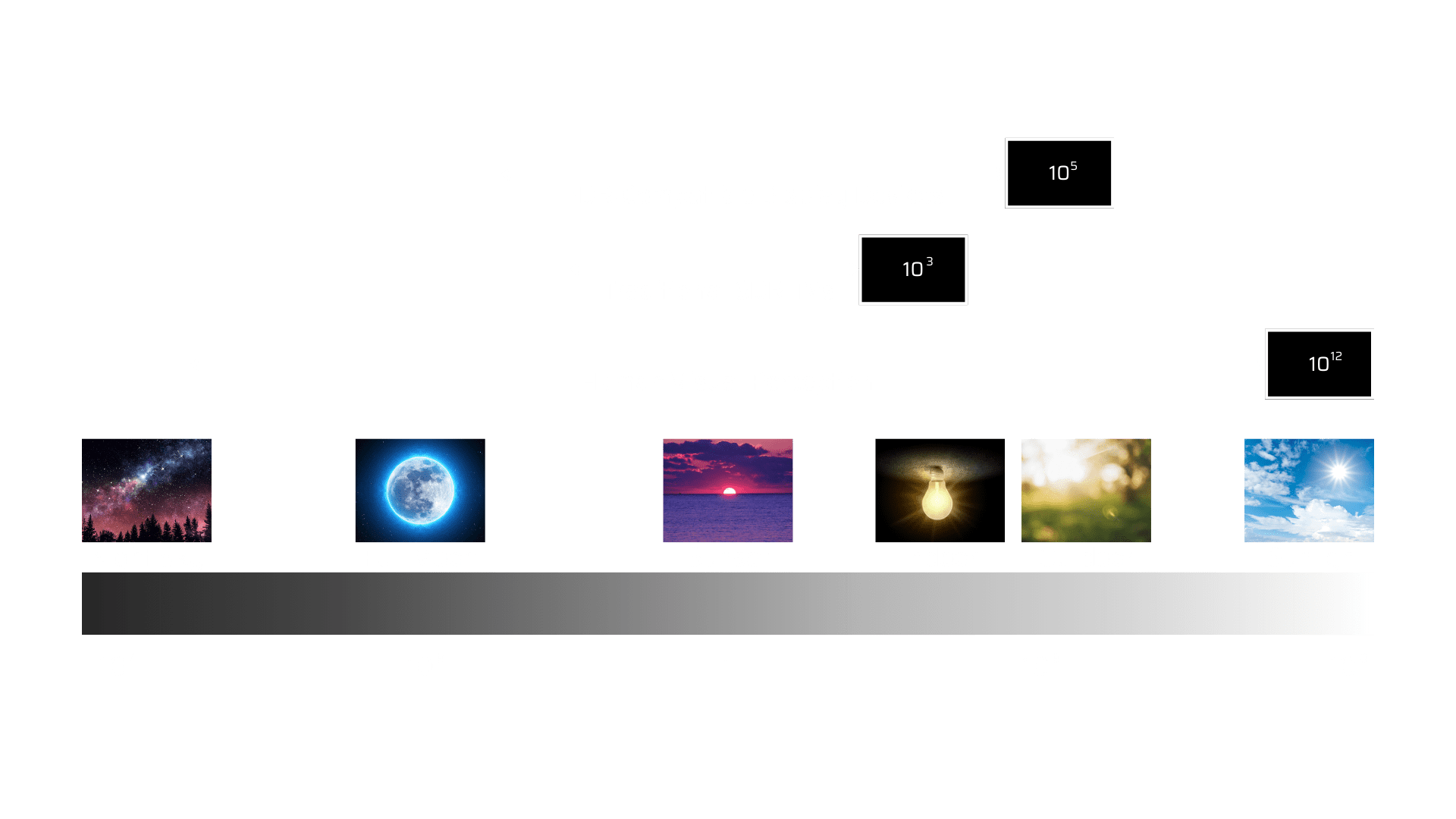

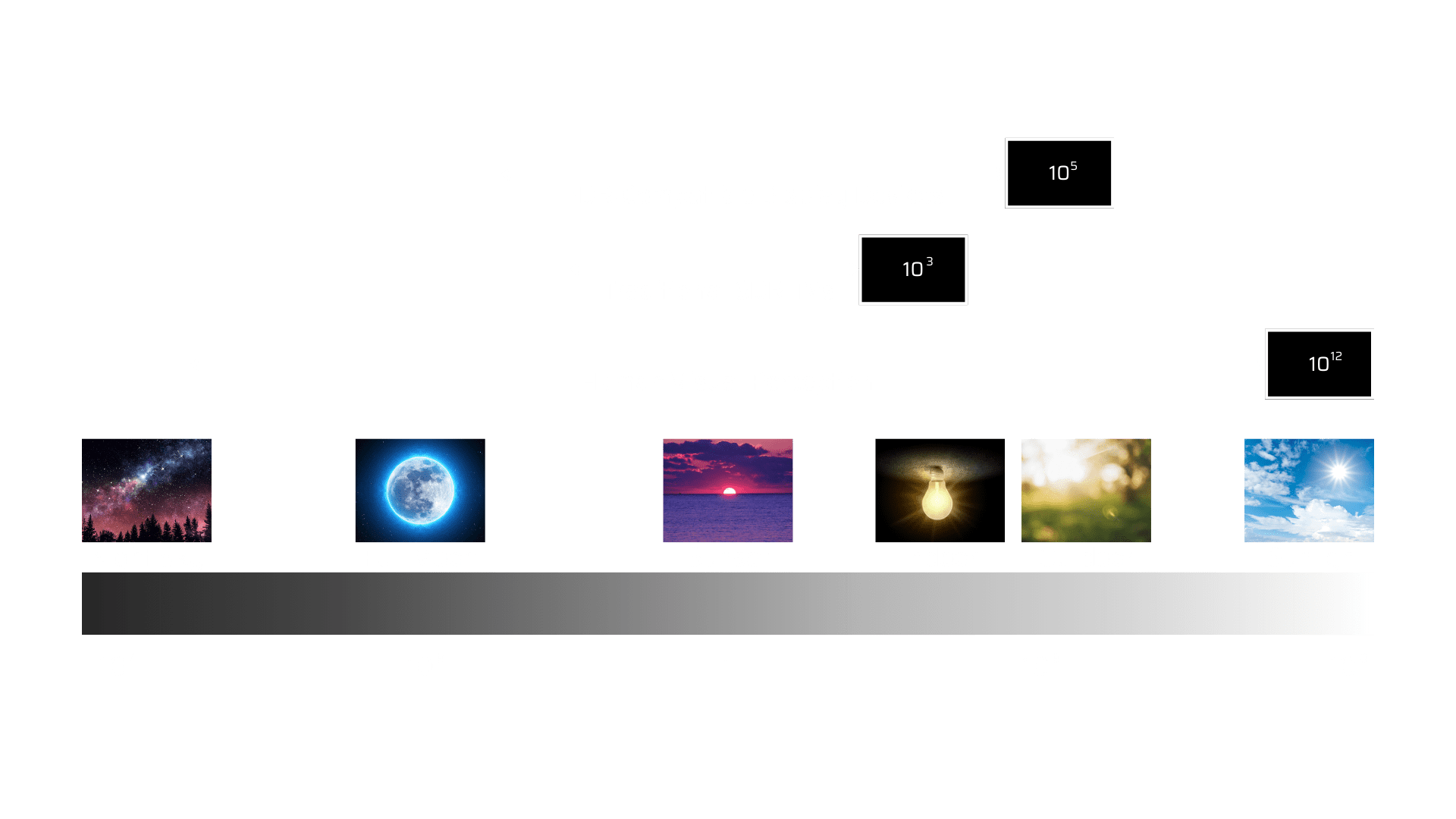

Luminance and brightness: Luminance plays a pivotal role in how we perceive contrast and detail in an image. Higher luminance levels make objects appear brighter and, in HDR content, help create striking highlights alongside deep shadows. Luminance is an objective, measurable quantity expressed in candelas per square metre (cd/m²), commonly called “nits.” Brightness, by contrast, is a subjective perception influenced by individual factors and environmental conditions. It is how we interpret the intensity of light emitted or reflected by an object, and it can vary from person to person.
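To make the difference between “how much light” and “how bright it looks” concrete, the sketch below computes luminance from linear RGB using the standard BT.2020 and BT.709 weights. It assumes the RGB values are linear light (not gamma- or PQ-encoded) and that 1.0 maps to a chosen peak in nits (10,000 nits is the PQ reference). The function names are illustrative, not from any particular library.

```python
# Luminance weights for LINEAR (not gamma- or PQ-encoded) RGB,
# as specified in ITU-R BT.2020 and ITU-R BT.709.
BT2020_WEIGHTS = (0.2627, 0.6780, 0.0593)
BT709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def relative_luminance(r: float, g: float, b: float, weights=BT2020_WEIGHTS) -> float:
    """Weighted sum of linear R, G, B; 1.0 means the reference peak white."""
    kr, kg, kb = weights
    return kr * r + kg * g + kb * b


def luminance_nits(r: float, g: float, b: float,
                   peak_nits: float = 10000.0, weights=BT2020_WEIGHTS) -> float:
    """Absolute luminance in cd/m^2 (nits).

    Assumption: a linear value of 1.0 corresponds to peak_nits
    (10,000 nits is the PQ reference level).
    """
    return relative_luminance(r, g, b, weights) * peak_nits


if __name__ == "__main__":
    # Equal linear intensity per primary, very different luminance:
    # the eye is far more sensitive to green than to blue.
    for name, rgb in {"red": (1, 0, 0), "green": (0, 1, 0), "blue": (0, 0, 1)}.items():
        print(name, round(luminance_nits(*rgb, peak_nits=1000.0), 1), "nits")
```

With these weights, green alone carries roughly two thirds of the luminance of white. This is one reason why a color's measured luminance and its perceived brightness are not the same thing.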

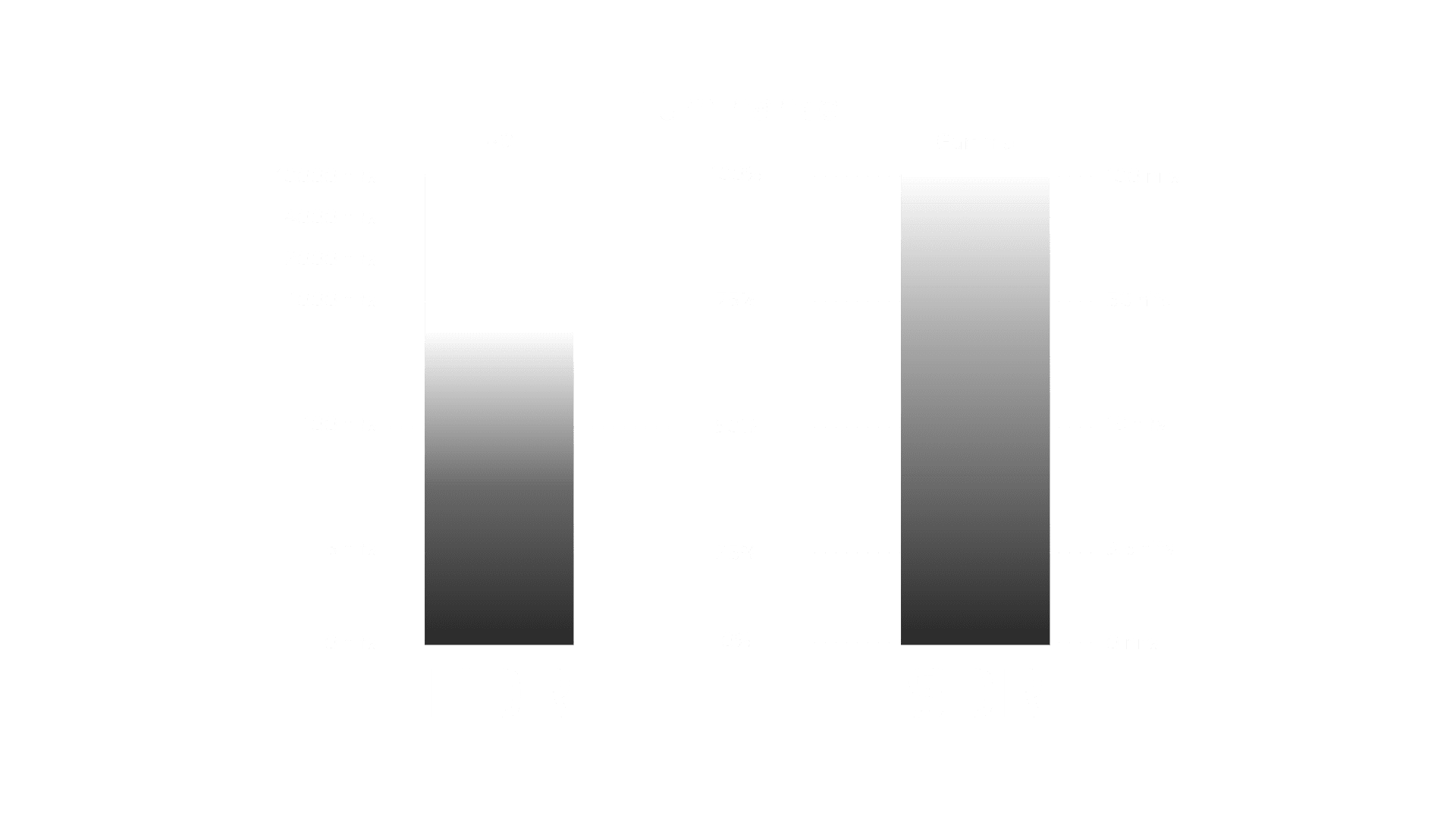

Luminance comparison between SDR and HDR


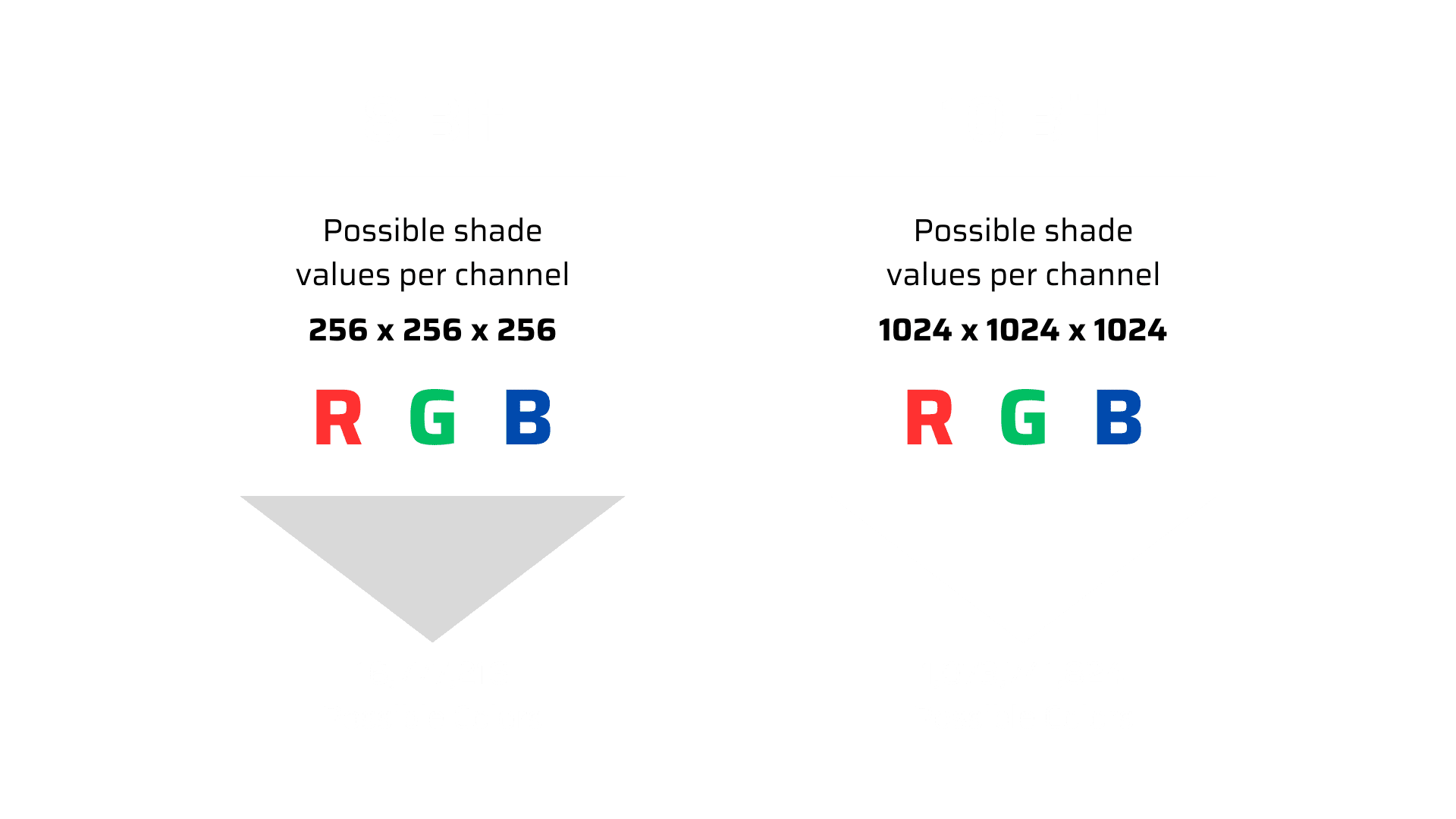

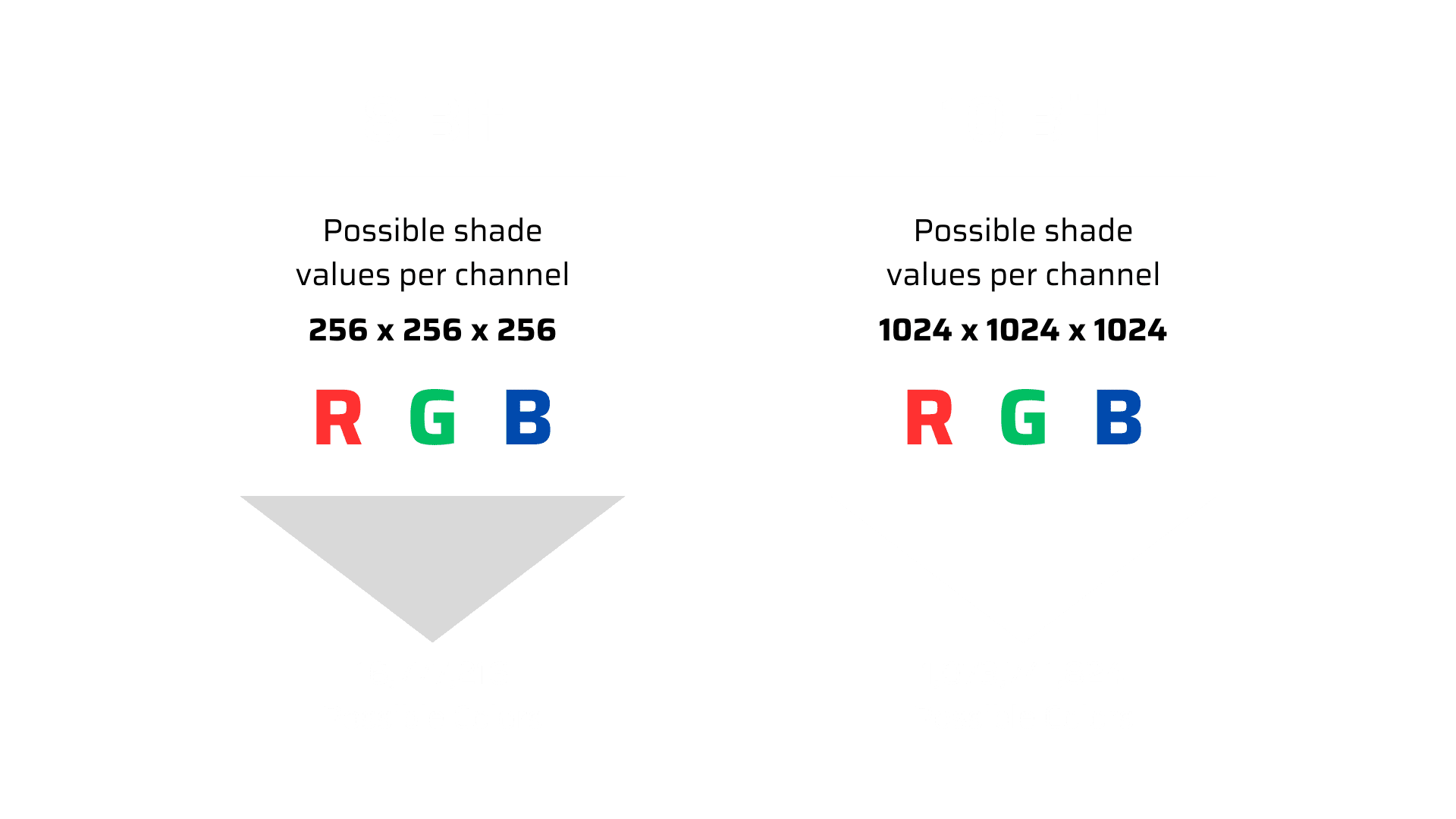

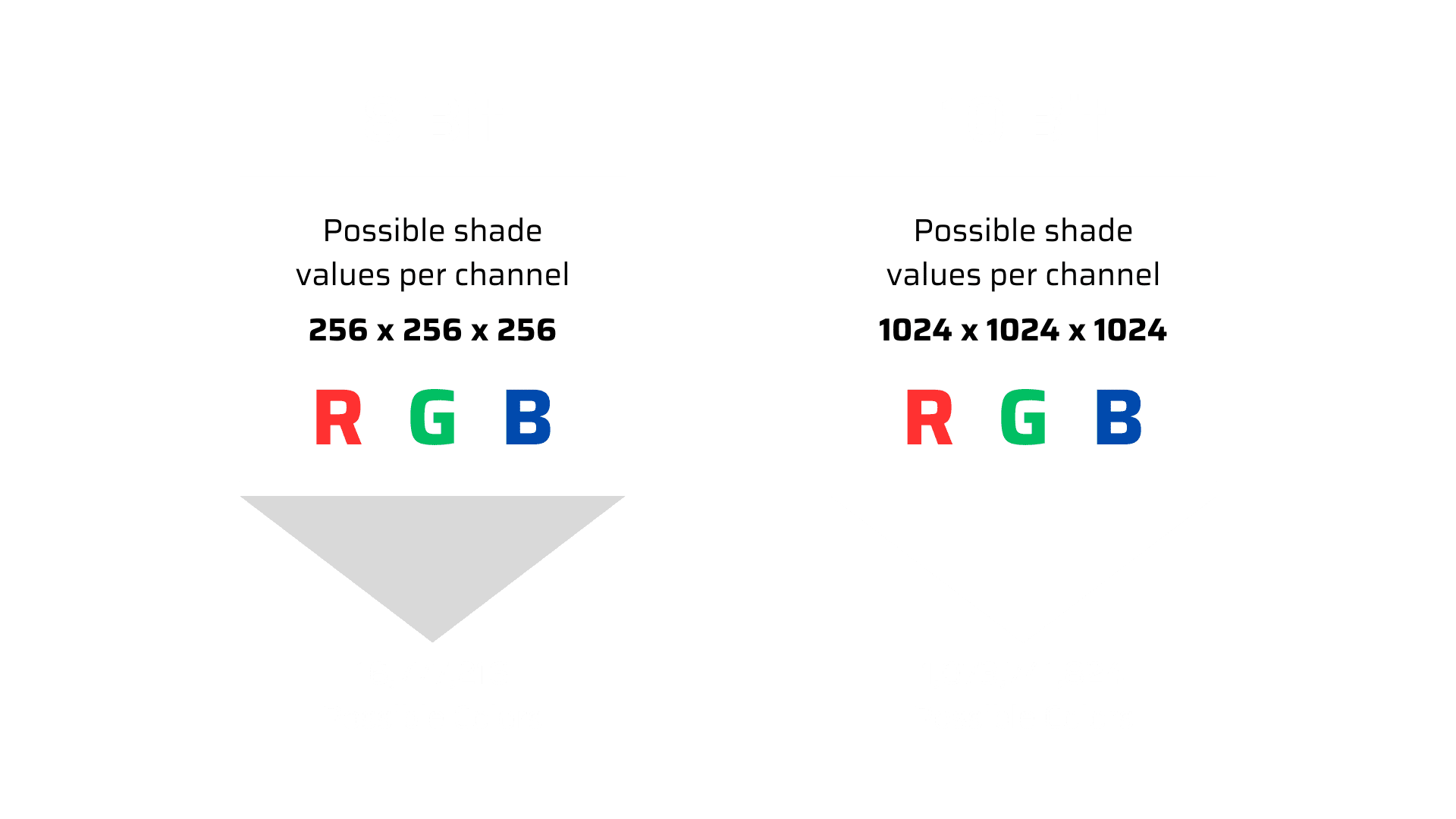

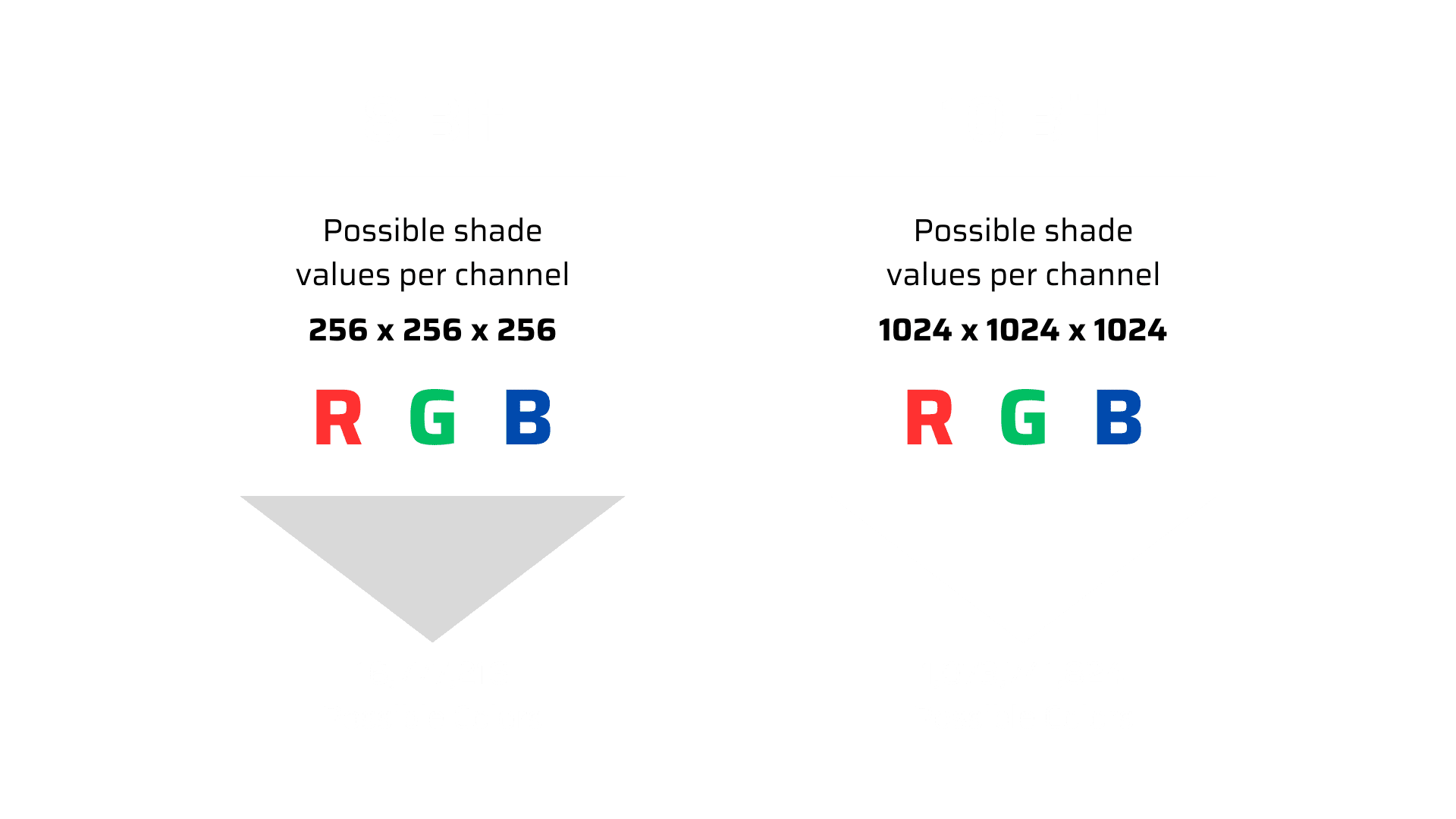

Color-depth (bit-depth): Color-depth, often referred to as bit-depth, is a fundamental concept in digital imaging and video solutions. It determines the richness and accuracy of colors in digital content. It is quantified in bits per channel, which dictates how many distinct levels each color channel (red, green, and blue) can represent. The total number of representable colors is the product of the levels in all three channels. Common bit depths include 8-bit, 10-bit, and 12-bit per channel. Higher bit depths allow for smoother color transitions and reduce color banding, making them crucial in applications like photography, video editing, and other video solutions. However, higher color depths also mean more data per pixel, and therefore larger file sizes, because more color information must be stored.
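The arithmetic behind bit depth is simple enough to check directly. This minimal sketch counts the levels per channel and the total colors for common bit depths. It also estimates the size of one uncompressed 4K frame. That estimate assumes three full-resolution channels with tightly packed bits. Real pipelines usually subsample chroma (for example, 4:2:0) and often pad 10-bit samples to 16 bits, so actual sizes differ.

```python
def levels_per_channel(bits: int) -> int:
    """Number of distinct values a single channel can take."""
    return 2 ** bits


def total_colors(bits: int, channels: int = 3) -> int:
    """Total representable colors: levels per channel, raised to the channel count."""
    return levels_per_channel(bits) ** channels


def uncompressed_frame_bytes(width: int, height: int, bits: int, channels: int = 3) -> int:
    """Raw frame size assuming tightly packed, non-subsampled samples (an idealization)."""
    return width * height * channels * bits // 8


if __name__ == "__main__":
    for bits in (8, 10, 12):
        print(f"{bits}-bit: {levels_per_channel(bits):,} levels/channel, "
              f"{total_colors(bits):,} colors, "
              f"{uncompressed_frame_bytes(3840, 2160, bits):,} bytes per 4K frame")
```

Going from 8-bit (SDR) to 10-bit (HDR10) multiplies the levels per channel by 4 and the total color count by 64. The raw data per pixel grows by only 25%.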

Possible colors with SDR and HDR Bit-depth


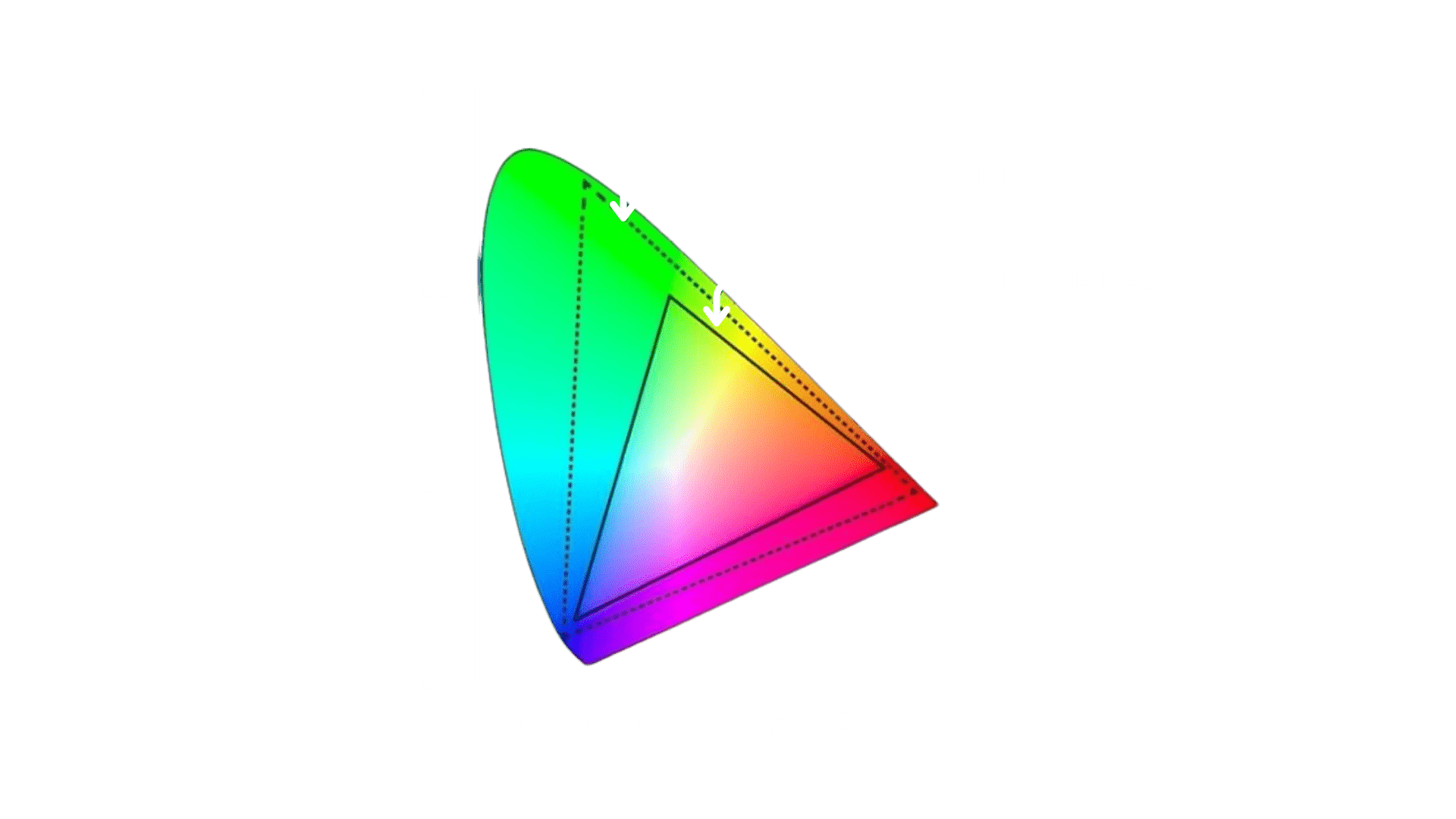

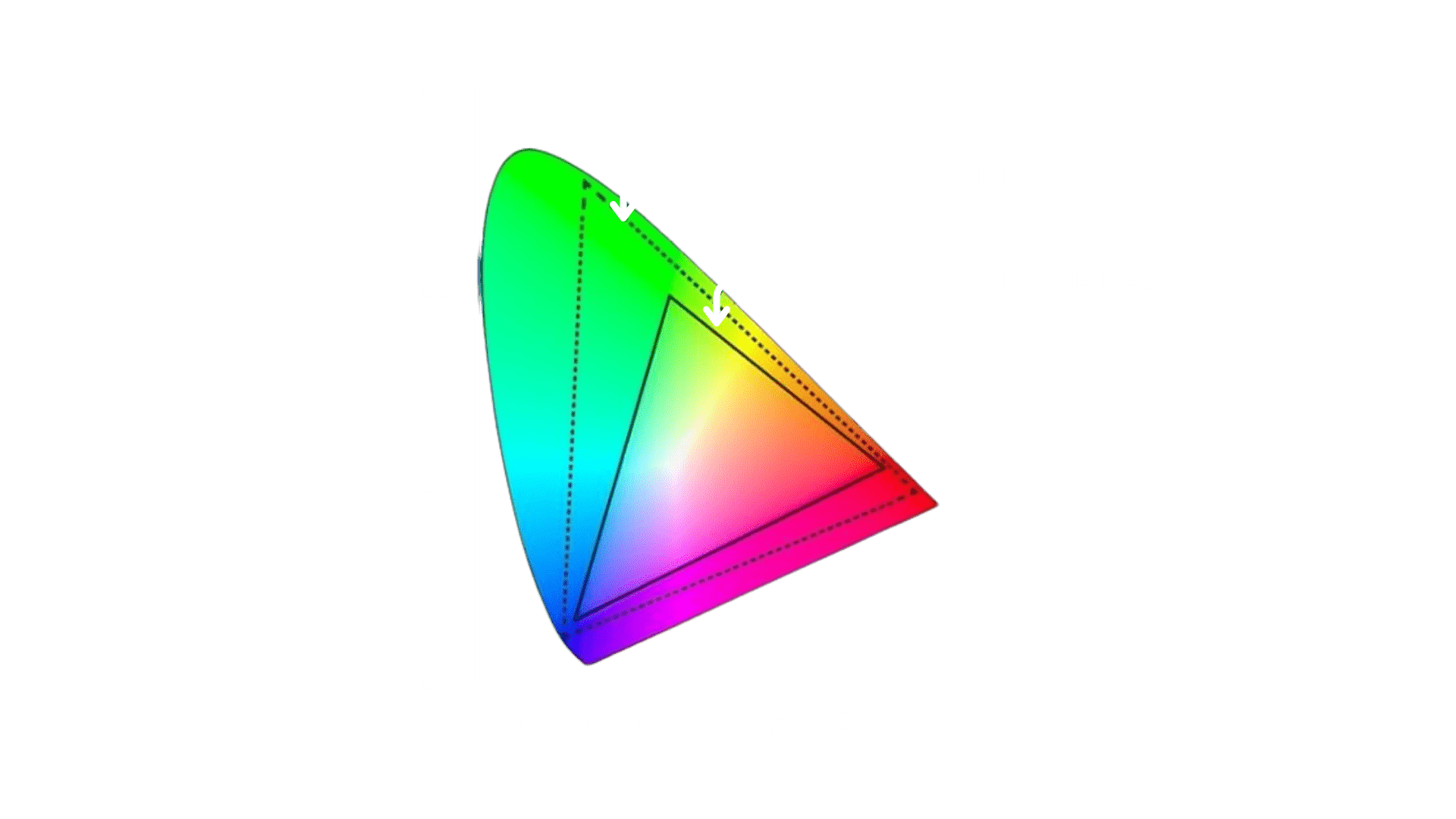

Color-space: Color-space is a pivotal concept in image processing, display technology, and video solutions. It defines a specific set of colors that can be accurately represented and manipulated within a digital system, which ensures consistency and accuracy in how colors are displayed, recorded, and interpreted across different devices and platforms. Technically, it describes how an array of pixel values should be displayed on a screen, including how pixel values are stored within a file, their range, and their meaning. Color spaces are essential for faithfully reproducing a wide range of colors, from deep blues to rich reds, resulting in visuals that are more vibrant and truer to life. A color space is akin to a palette of colors available for use. It is defined by its color primaries (together with a white point), which are plotted as points on a chromaticity diagram such as the CIE 1931 diagram. The triangle formed by the primaries bounds the range of colors, or gamut, that the color space can reproduce. A broader color gamut includes a wider range of colors, while a narrower one offers a more limited selection. Various color spaces, such as BT.709 and BT.2020, are standardized to ensure compatibility across different devices and platforms.
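One way to see how much wider BT.2020 is than BT.709 is to compare the triangles their primaries form on the CIE 1931 xy chromaticity diagram. The sketch below uses the primaries published in ITU-R BT.709 and BT.2020. It computes each triangle's area with the shoelace formula and tests whether a chromaticity point lies inside a gamut. Area in xy is only a rough proxy, because the xy diagram is not perceptually uniform, so the ratio should be read as illustrative.

```python
# CIE 1931 xy chromaticities of the primaries (ITU-R BT.709 and BT.2020).
BT709 = {"R": (0.640, 0.330), "G": (0.300, 0.600), "B": (0.150, 0.060)}
BT2020 = {"R": (0.708, 0.292), "G": (0.170, 0.797), "B": (0.131, 0.046)}
D65_WHITE = (0.3127, 0.3290)  # white point shared by both standards


def gamut_area(primaries: dict) -> float:
    """Area of the R-G-B triangle in the xy plane (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries["R"], primaries["G"], primaries["B"]
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


def _cross(o, a, p) -> float:
    """z-component of (a - o) x (p - o); its sign tells which side of edge o->a p lies on."""
    return (a[0] - o[0]) * (p[1] - o[1]) - (a[1] - o[1]) * (p[0] - o[0])


def in_gamut(primaries: dict, xy) -> bool:
    """True if chromaticity xy lies inside (or on) the gamut triangle."""
    r, g, b = primaries["R"], primaries["G"], primaries["B"]
    d = (_cross(r, g, xy), _cross(g, b, xy), _cross(b, r, xy))
    has_neg = any(v < 0 for v in d)
    has_pos = any(v > 0 for v in d)
    return not (has_neg and has_pos)


if __name__ == "__main__":
    a709, a2020 = gamut_area(BT709), gamut_area(BT2020)
    print(f"BT.709 area: {a709:.4f}, BT.2020 area: {a2020:.4f}, ratio: {a2020 / a709:.2f}")
    # The BT.2020 green primary cannot be reproduced in BT.709.
    print("BT.2020 green inside BT.709?", in_gamut(BT709, BT2020["G"]))
```

By this measure, the BT.2020 triangle is a little under twice the size of the BT.709 triangle. Its green primary falls well outside what BT.709 can represent.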

CIE Chromaticity Diagram representing Rec.709 vs Rec.2020


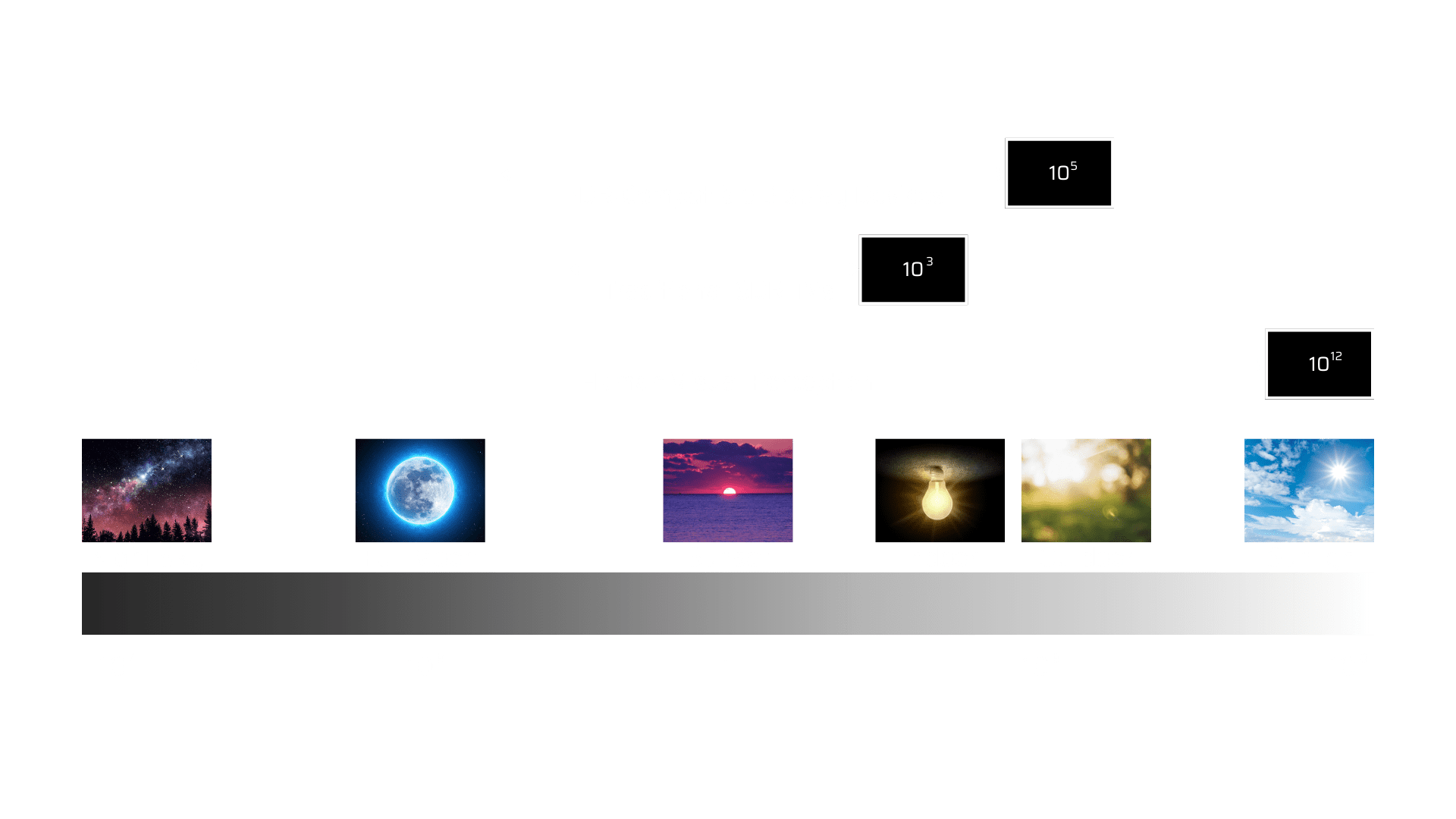

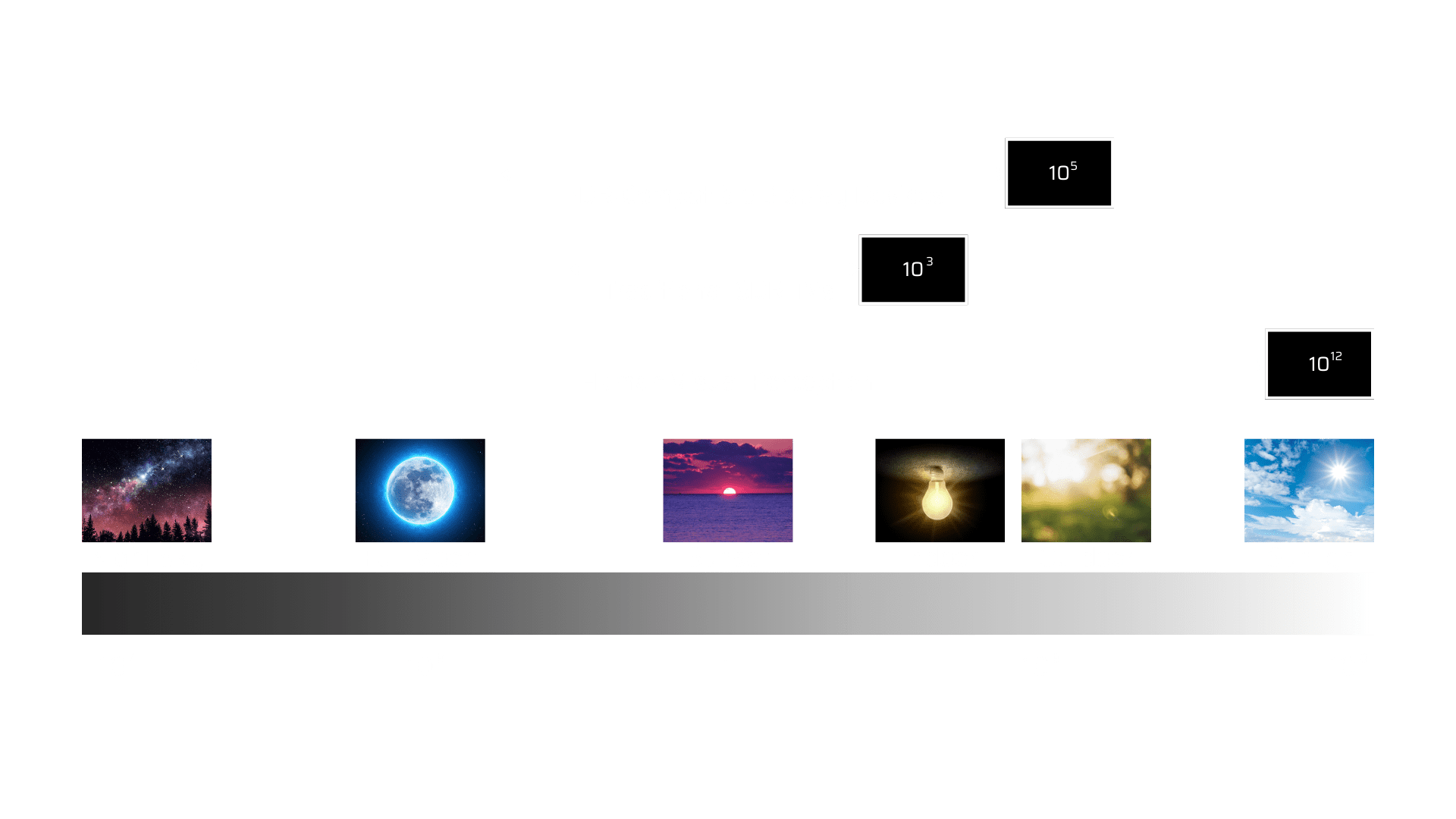

Dynamic range: Dynamic range is the ratio between the largest and smallest values that a specific quantity can take. The idea is commonly applied to signals such as sound and light. In the context of images, dynamic range determines how the brightest and darkest elements appear within a picture, and how well a camera or film can handle varying levels of light. It also greatly affects the interplay between brightness and darkness in a developed photograph. Imagine dynamic range as a scale stretching from the soft glow of a candlelit room to the brilliance of a sunlit day. In simpler terms, a wide dynamic range lets us see fine details in shadows as well as the brilliance of well-lit scenes, in both videos and images.
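Dynamic range is usually quoted either as a contrast ratio (peak divided by black level) or in photographic stops, where each stop is a doubling of luminance. The sketch below converts between the two. The HDR figures are the 0.0001 to 10,000 nit span that PQ can encode. The SDR display values (100 nits peak, 0.1 nits black) are an illustrative assumption, not a measurement of any particular screen.

```python
import math


def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Peak luminance divided by black level."""
    if black_nits <= 0:
        raise ValueError("black level must be positive (a true zero gives infinite contrast)")
    return peak_nits / black_nits


def dynamic_range_stops(peak_nits: float, black_nits: float) -> float:
    """Dynamic range in stops: each stop doubles the luminance."""
    return math.log2(contrast_ratio(peak_nits, black_nits))


if __name__ == "__main__":
    # Assumed, illustrative SDR display vs. the full PQ signal range.
    cases = {"SDR display (assumed)": (100.0, 0.1), "PQ signal range": (10000.0, 0.0001)}
    for label, (peak, black) in cases.items():
        print(f"{label}: {contrast_ratio(peak, black):,.0f}:1, "
              f"{dynamic_range_stops(peak, black):.1f} stops")
```

Under these assumptions, the SDR example spans about 10 stops. The PQ signal range spans roughly 26.6 stops, which is the headroom HDR10 content can use.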

Dynamic Range supported by SDR and HDR Displays


Difference between HDR and SDR

| Aspect | HDR | SDR |
| --- | --- | --- |
| Luminance | Offers a broader luminance range, producing brighter highlights and deeper blacks for more lifelike visuals. | A limited luminance range leads to less dazzling bright areas and shallower dark scenes. |
| Color depth | Uses 10 bits per channel, allowing finer color gradations and smoother transitions between colors. | Typically uses 8 bits per channel, resulting in fewer color gradations and potential color banding. |
| Color space | Uses a wider color gamut, BT.2020, reproducing more vivid and lifelike colors. | Typically uses the narrower BT.709 color space, offering a more limited color range. |
| Transfer function | Uses the perceptual quantizer (PQ) transfer function, which represents absolute luminance levels from 0.0001 up to 10,000 cd/m² (nits). | Relies on a gamma-based transfer function (BT.1886), which is not designed to represent extreme luminance levels. |

Metadata in HDR10

HDR10 combines the PQ EOTF, the BT.2020 wide color gamut (WCG), and static metadata: SMPTE ST 2086 mastering display information plus MaxFALL and MaxCLL. The HDR10 metadata structure follows ITU-T H-series Supplement 18 for HDR and WCG video. There are three HDR10-related Video Usability Information (VUI) parameters: color primaries, transfer characteristics, and matrix coefficients. This VUI metadata is carried in the Sequence Parameter Set (SPS), which in a typical stream is sent along with the intra-coded frames.
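The three VUI parameters are small integer code points defined in ITU-T H.273 and reused by H.264/HEVC. For HDR10 they are 9 (BT.2020 primaries), 16 (SMPTE ST 2084 PQ), and 9 (BT.2020 non-constant-luminance matrix). This minimal sketch assumes a bitstream parser has already extracted the three values into a dictionary. The key names and the function `looks_like_hdr10` are hypothetical. A complete check would also look at bit depth and the MDCV/CLL SEI messages, so treat this as a heuristic only.

```python
# Code points from ITU-T H.273, as carried in H.264/HEVC VUI.
HDR10_VUI = {
    "colour_primaries": 9,          # BT.2020 / BT.2100 primaries
    "transfer_characteristics": 16, # SMPTE ST 2084 (PQ)
    "matrix_coefficients": 9,       # BT.2020 non-constant-luminance Y'CbCr
}

SDR_BT709_VUI = {
    "colour_primaries": 1,          # BT.709
    "transfer_characteristics": 1,  # BT.709
    "matrix_coefficients": 1,       # BT.709
}


def looks_like_hdr10(vui: dict) -> bool:
    """Hypothetical heuristic: True if all three VUI code points match HDR10.

    Assumes `vui` uses the key names above. Does NOT check bit depth
    or the MDCV/CLL SEI messages.
    """
    return all(vui.get(key) == value for key, value in HDR10_VUI.items())


if __name__ == "__main__":
    print("HDR10 stream:", looks_like_hdr10(HDR10_VUI))
    print("BT.709 SDR stream:", looks_like_hdr10(SDR_BT709_VUI))
```

Keeping the expected code points in one table makes it easy to extend the same check to other signalling combinations. For example, HLG uses transfer characteristics 18.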

In addition to the VUI parameters, HDR10 uses two Supplemental Enhancement Information (SEI) messages: Mastering Display Color Volume (MDCV) and Content Light Level (CLL).
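The CLL message carries two numbers derived from the content itself: MaxCLL and MaxFALL. As we understand CTA-861.3, a pixel's light level is the maximum of its linear R, G, and B values in nits. MaxCLL is the highest such level anywhere in the content. MaxFALL is the highest per-frame average of those levels. The sketch below assumes frames are given as lists of linear (R, G, B) tuples already in nits. Real tools work on decoded, PQ-linearized video and may differ in details such as rounding, so this is illustrative only.

```python
from typing import Iterable, Sequence, Tuple

# Assumption: each pixel is (R, G, B) in LINEAR light, already scaled to cd/m^2 (nits).
Pixel = Tuple[float, float, float]


def content_light_levels(frames: Iterable[Sequence[Pixel]]) -> Tuple[int, int]:
    """Return (MaxCLL, MaxFALL) in whole nits for a sequence of frames."""
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        # Per-pixel light level: the brightest of the three linear components.
        levels = [max(pixel) for pixel in frame]
        if not levels:
            continue  # skip empty frames
        max_cll = max(max_cll, max(levels))
        # Frame-average light level for this frame.
        max_fall = max(max_fall, sum(levels) / len(levels))
    return round(max_cll), round(max_fall)


if __name__ == "__main__":
    # Two tiny two-pixel "frames" with made-up values, purely for illustration.
    frames = [
        [(100.0, 50.0, 20.0), (10.0, 10.0, 10.0)],
        [(1000.0, 200.0, 100.0), (0.0, 0.0, 0.0)],
    ]
    max_cll, max_fall = content_light_levels(frames)
    print(f"MaxCLL={max_cll} nits, MaxFALL={max_fall} nits")
```

A single bright specular highlight can push MaxCLL very high while barely moving MaxFALL. This is why the two values are signalled separately.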

Mastering Display Color Volume (MDCV):


MDCV, standardized as SMPTE ST 2086, is an important piece of static metadata in HDR10. It describes the display on which the content was mastered: its color primaries, white point, and minimum and maximum luminance. This helps HDR-compatible screens with different capabilities map the content to their own color volume and display it as intended.

- Max Content Light Level (MaxCLL):

MaxCLL, carried in the CLL SEI message, specifies the highest light level, in nits (cd/m²), of any single pixel anywhere in the content. It helps your display anticipate specific, exceptionally bright moments.

- Max Frame-Average Light Level (MaxFALL):

MaxFALL, also carried in the CLL SEI message, specifies the highest frame-average light level, in nits, of any frame in the content. It complements MaxCLL by telling the display how bright the brightest frame is overall, which helps prevent excessive dimming or over-brightening and keeps the viewing experience consistent and immersive.

- Transfer function (EOTF, electro-optical transfer function):

In HDR10, the EOTF is the SMPTE ST 2084 perceptual quantizer (PQ) curve, signalled by the VUI transfer characteristics parameter. The content is encoded with the inverse of this curve, and the display applies the EOTF to convert decoded code values back into absolute luminance, so brightness levels are presented accurately on your screen.
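The PQ curve is compact enough to write out in full. This sketch implements the SMPTE ST 2084 EOTF and its inverse, using the constants published in that standard, with 10,000 nits as the reference peak. The helper `to_10bit_narrow` is a hypothetical illustration of how a normalized PQ value maps to a 10-bit narrow-range (64 to 940) code value for a neutral grey where R = G = B. Real encoders apply PQ per color component before converting to Y'CbCr.

```python
# SMPTE ST 2084 (PQ) constants, written as the exact rational values from the standard.
M1 = 2610 / 16384        # 0.1593017578125
M2 = 2523 / 4096 * 128   # 78.84375
C1 = 3424 / 4096         # 0.8359375
C2 = 2413 / 4096 * 32    # 18.8515625
C3 = 2392 / 4096 * 32    # 18.6875
PEAK_NITS = 10000.0      # PQ reference peak luminance


def pq_inverse_eotf(nits: float) -> float:
    """Encode absolute luminance (cd/m^2) into a normalized PQ signal in [0, 1]."""
    y = min(max(nits / PEAK_NITS, 0.0), 1.0)
    y_m1 = y ** M1
    return ((C1 + C2 * y_m1) / (1.0 + C3 * y_m1)) ** M2


def pq_eotf(signal: float) -> float:
    """Decode a normalized PQ signal in [0, 1] back into absolute luminance (cd/m^2)."""
    e = min(max(signal, 0.0), 1.0)
    e_p = e ** (1.0 / M2)
    y = (max(e_p - C1, 0.0) / (C2 - C3 * e_p)) ** (1.0 / M1)
    return y * PEAK_NITS


def to_10bit_narrow(signal: float) -> int:
    """Hypothetical helper: quantize a [0, 1] signal to 10-bit narrow range (64..940)."""
    return round(64 + 876 * min(max(signal, 0.0), 1.0))


if __name__ == "__main__":
    for nits in (0.1, 100.0, 1000.0, 4000.0, 10000.0):
        signal = pq_inverse_eotf(nits)
        print(f"{nits:>8} nits -> PQ {signal:.4f} -> code {to_10bit_narrow(signal)}"
              f" -> back to {pq_eotf(signal):.4f} nits")
```

Running it shows the perceptual design of PQ. About half of the signal range is spent below 100 nits, and the next 9,900 nits share the rest, because the eye is far more sensitive to changes in dark tones.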

Sample HDR10 metadata parsed using ffprobe:

Future of HDR10 & competing HDR formats

The effectiveness of an HDR10 implementation is closely tied to the quality of the TV used for viewing. When applied correctly, HDR10 enhances the visual appeal of video content. However, other HDR formats, such as HDR10+, HLG (Hybrid Log-Gamma), and Dolby Vision, are also gaining prominence because of their ability to further enhance the visual quality of videos.

Competing HDR formats, like Dolby Vision and HDR10+, are gaining popularity due to their use of dynamic metadata. Unlike HDR10, which relies on static metadata for the entire content, these formats adjust brightness and color information on a scene-by-scene or even frame-by-frame basis. This dynamic approach lets each scene be optimized more precisely, ultimately enhancing the viewing experience. The rivalry among HDR formats is fueling innovation as each format strives to surpass the others in visual quality and compatibility. This ongoing competition may lead to new technologies and standards, further expanding what HDR can achieve.

To sum it up, HDR10 isn’t just a buzzword; it’s a revolution in how we experience visuals in any multimedia solution. It’s the technology that takes your screen from good to mind-blowingly fantastic. HDR10 is very popular because it carries no licensing fees (unlike some other HDR standards), it is widely adopted across the industry, and a large base of compatible equipment is already in use. So, whether you’re a movie buff, a gamer, or just someone who appreciates beautiful visuals, HDR10 is your backstage pass to a world of incredible imagery.

With continuous advancements in technology, we at MosChip help businesses across various industries build intelligent media solutions, from the simplest to the most complex multimedia technologies. We have hands-on experience in designing high-performance media applications, architecting complete video pipelines, audio/video codec engineering, audio/video driver development, and multimedia framework integration. Our multimedia engineering services extend across industries including media and entertainment, automotive, gaming, and consumer electronics.

About MosChip

MosChip Technologies Limited is a publicly-traded semiconductor and system design services company headquartered in Hyderabad, India, with 1000+ engineers located in Silicon Valley-USA, Hyderabad, and Bengaluru. MosChip has over a twenty-year track record in designing semiconductor products and SOCs for computing, networking, and consumer applications. Over the past 2 decades, MosChip has developed and shipped millions of connectivity ICs. For more information, visit moschip.com
