Regression Testing in CI/CD and its Challenges

The introduction of Continuous Integration/Continuous Deployment (CI/CD) has strengthened the release mechanism, helping products reach the market faster than ever and allowing application development teams to deliver code changes more frequently and reliably. Regression testing ensures that changes have not introduced new defects, by testing the modified sections of the code as well as the parts that may be affected by the modifications. According to reports from Global Market Insights, the software testing market was sized at about $40 billion in 2020, with growth of about 7% a year projected through 2027. Regression testing accounted for more than 8.5 percent of the market and is expected to grow at over 8% annually through 2027.

The Importance of Regression Testing

Regression testing is a must for large software development teams following an agile model. When many developers commit frequently, regression testing is needed to catch any unexpected change in overall functionality caused by each commit. The CI/CD setup detects the failure, notifies the developers as soon as it occurs, and makes sure the faulty commit does not get shipped to deployment.

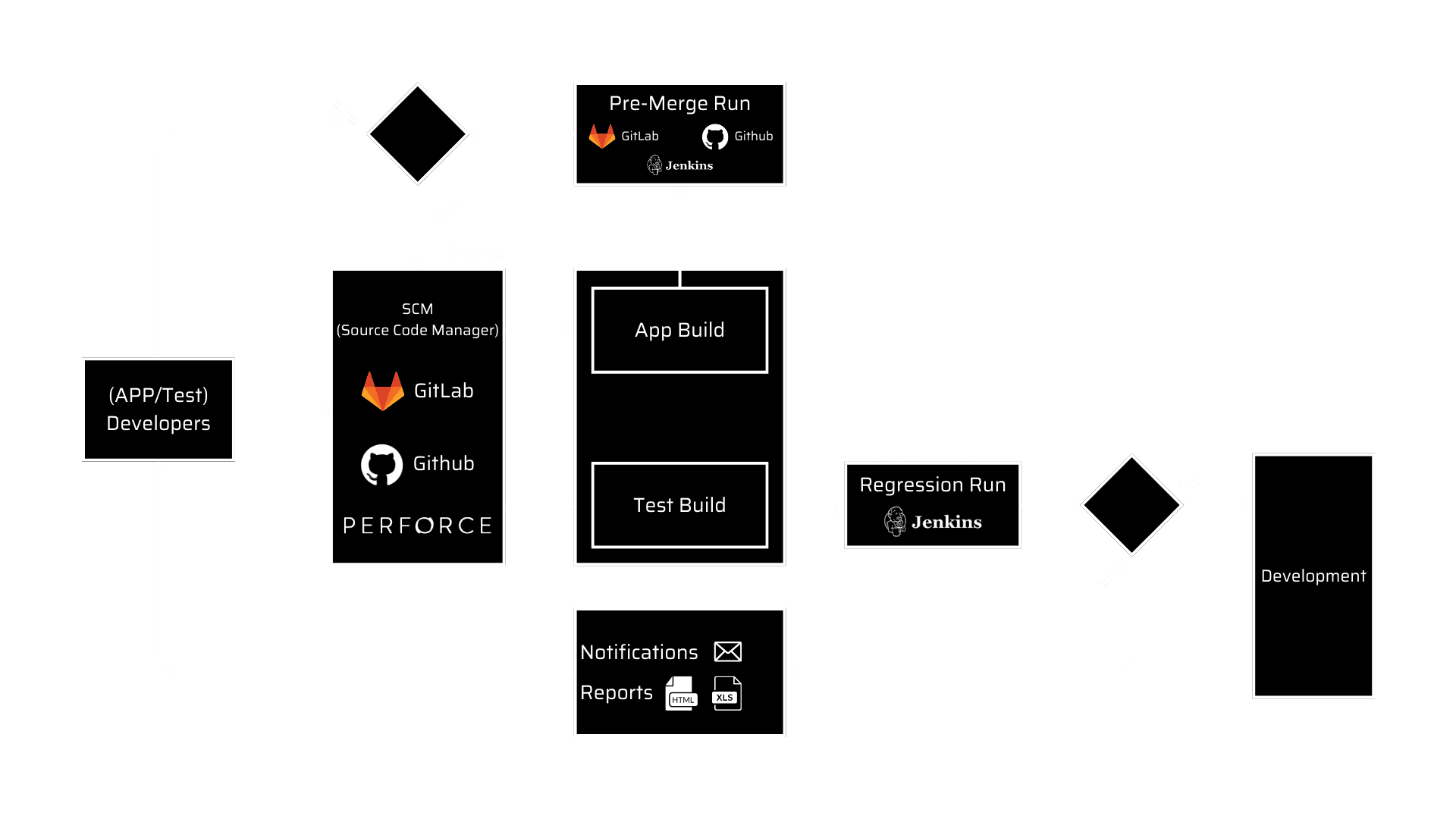

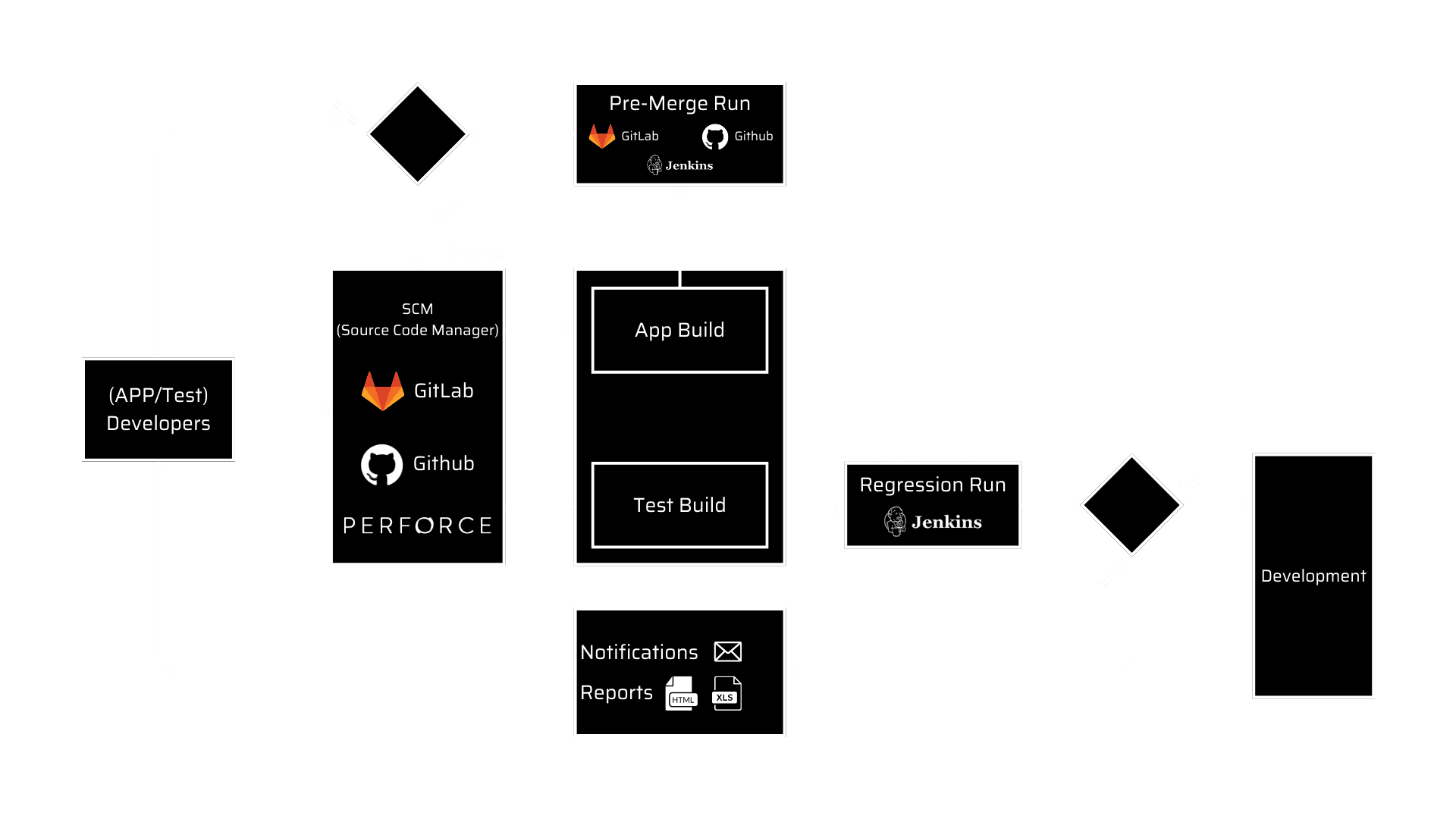

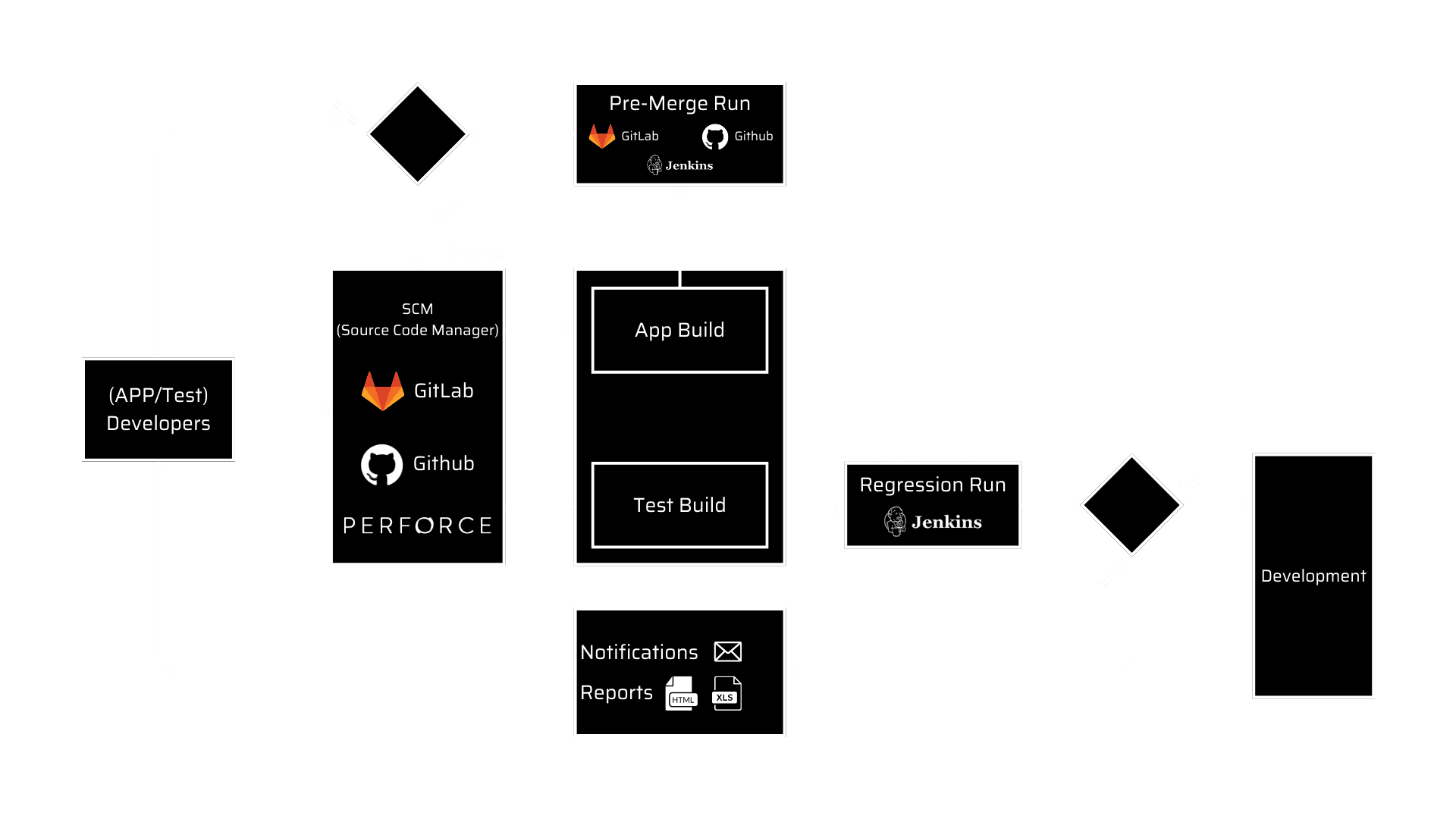

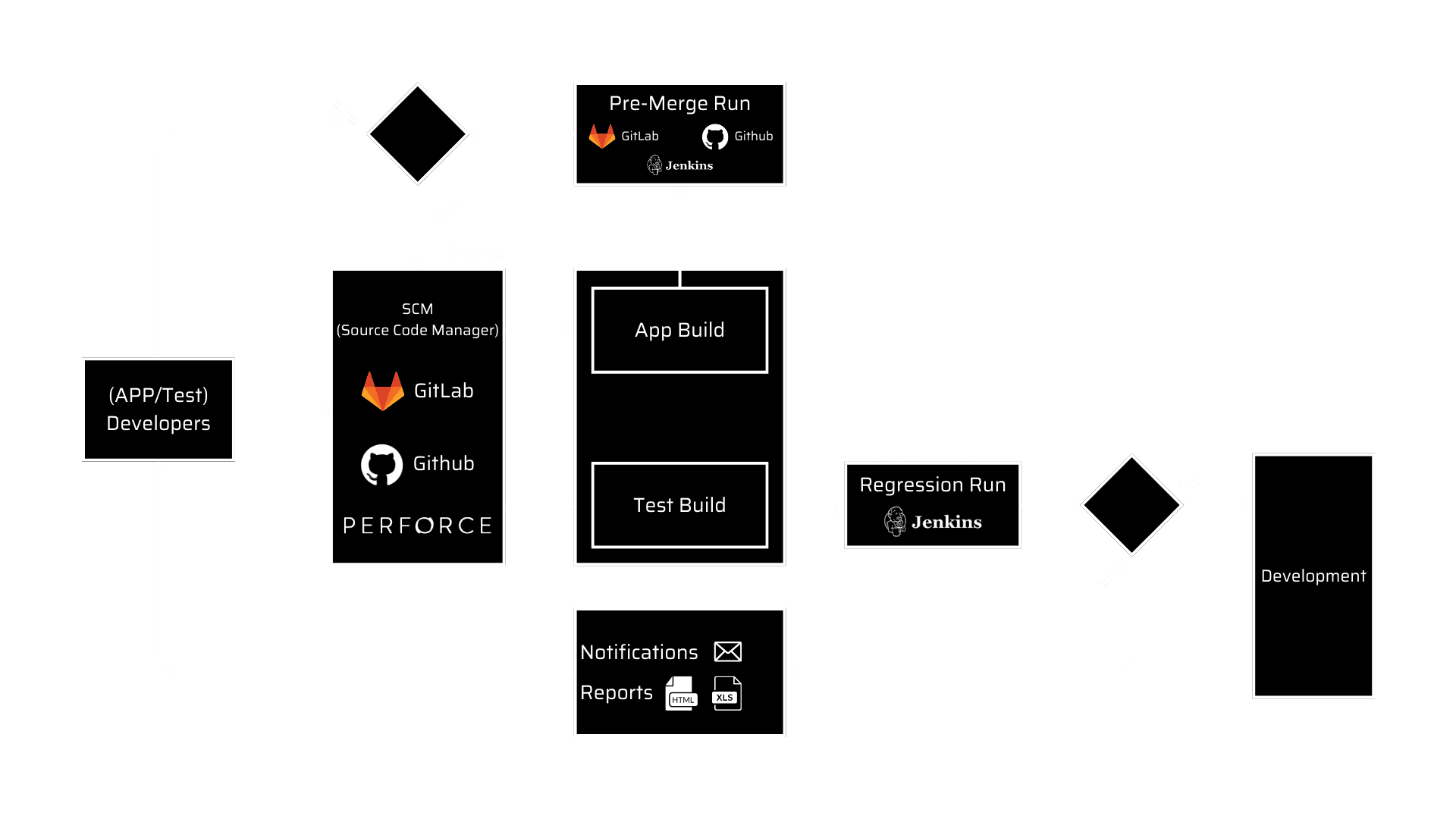

Many CI/CD tools are available, but Jenkins is widely adopted because it is open source, offers many productivity plugins, has active community support, and is easy to set up and scale. Source Code Management (SCM) platforms like GitLab and GitHub also provide a good set of CI/CD features and are preferred when a team wants a single platform for both code collaboration and CI/CD.

The challenges grow when a CI/CD setup handles multiple software products from different teams, uses multiple SCMs such as GitLab, GitHub, and Perforce, runs on a cluster of 30+ high-configuration computing hosts with various operating systems, and manages 1000+ regression jobs. As complexity increases, it becomes important to have an effective notification mechanism, robust monitoring, balanced load distribution across the cluster, and scalability and maintenance support, along with priority management. In such scenarios, a QA team that focuses on CI/CD optimization plays a significant part in shortening the time to market and meeting the committed release timeline.

Let us see the challenges involved in regression testing and how to overcome them in the blog ahead.

Effective notification mechanism

CI/CD tools like Jenkins provide plugins to notify a group of people, or the specific team members whose changes caused unexpected failures in regression testing. Email notifications generated by these plugins help draw attention to problems that need to be fixed quickly. But when many such notifications flood the mailbox, investigating each one becomes inefficient and some are likely to be missed. To handle such scenarios, a Failure Summary Report (FSR) highlighting new failures is helpful. The FSR can have an executive summary section along with detailed summary sections. Based on project requirements, one can include JIRA links, Jenkins links, SCM commit links, and time stamps so that developers have all required references in a single document. The FSR can be generated once or several times a day.
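As a rough illustration of the "new failures" idea, the sketch below compares two sets of run results and renders a short plain-text summary. The `JobResult` fields, the status strings, and the report layout are all assumptions made for this example; a real FSR would pull this data from Jenkins and the SCM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobResult:
    job: str        # regression job name
    status: str     # "PASS" or "FAIL" (assumed status values)
    build_url: str  # link to the build (hypothetical field)
    commit: str     # commit the run was built from (hypothetical field)


def new_failures(previous, current):
    """Return results that fail now but were not failing in the previous run."""
    failed_before = {r.job for r in previous if r.status == "FAIL"}
    return [r for r in current if r.status == "FAIL" and r.job not in failed_before]


def render_fsr(previous, current):
    """Build a plain-text report: executive summary line, then new failures."""
    failing = [r for r in current if r.status == "FAIL"]
    fresh = new_failures(previous, current)
    lines = [
        "Failure Summary Report",
        f"Total jobs: {len(current)}, failing: {len(failing)}, "
        f"new failures: {len(fresh)}",
        "",
        "New failures:",
    ]
    for r in fresh:
        lines.append(f"- {r.job} (commit {r.commit}) {r.build_url}")
    return "\n".join(lines)
```

Listing only jobs that were not already failing keeps the report short, which is the point of replacing per-job emails with a summary.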

Optimum use of computing resources

When CI/CD pipelines use a cluster of multiple hosts with high computing resources, the goal is the minimum turnaround time for a regression run cycle with maximum throughput. To achieve this, regression runs need to be distributed correctly across the cluster. Workload management and scheduler tools like IBM LSF and PBS can run jobs concurrently based on the computing resources available at a given point in time. In Jenkins, one can add multiple slave nodes to distribute jobs across the cluster and minimize waiting time in the Jenkins queue. This must be done carefully, based on the available computing power and the resource configuration of the servers hosting the slaves; otherwise it can result in node crashes and loss of data.
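To make the load-balancing concern concrete, here is a minimal sketch of one possible placement rule: each job goes to the host with the most free slots, and jobs that do not fit stay queued rather than oversubscribing a host. This is not how LSF, PBS, or Jenkins schedule internally; the slot counts and host names are hypothetical.

```python
import heapq


def distribute(jobs, host_slots):
    """Assign each job to the host with the most free slots.

    jobs: list of job names.
    host_slots: dict mapping host name to how many jobs it can run at once.
    Returns (assignments, queued); queued holds jobs that did not fit.
    """
    # heapq is a min-heap, so free slots are stored negated.
    heap = [(-slots, host) for host, slots in host_slots.items() if slots > 0]
    heapq.heapify(heap)
    assignments = {host: [] for host in host_slots}
    queued = []
    for job in jobs:
        if not heap:
            queued.append(job)  # cluster is full: wait instead of overloading
            continue
        neg_free, host = heapq.heappop(heap)
        assignments[host].append(job)
        if neg_free + 1 < 0:  # host still has at least one free slot
            heapq.heappush(heap, (neg_free + 1, host))
    return assignments, queued
```

For example, `distribute(["j1", "j2", "j3", "j4"], {"h1": 2, "h2": 1})` places two jobs on `h1`, one on `h2`, and leaves the fourth queued.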

Resource monitoring

While scaling to support the growing requirements of CI/CD, it is easy to overlook disk space or cluster resource limitations. If not handled properly, this results in CI/CD node crashes, slow executions, and loss of data. If such an incident happens when a team is approaching an important deliverable, it becomes difficult to meet the committed release timeline. A robust monitoring and notification mechanism should be in place to avoid such scenarios. One can build a monitoring application that continuously monitors the resources of each computing host, network disk space, and local disk space, and raises a red flag when the set thresholds are crossed.
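A threshold check of this kind can be sketched with the standard library alone. The example below covers only disk space; the 90% default threshold and the alert text are assumptions, and a real monitor would also track CPU and memory and send the alerts somewhere.

```python
import shutil


def disk_alerts(paths, max_used_fraction=0.9, usage=shutil.disk_usage):
    """Return one alert string per path whose disk usage crosses the threshold.

    `usage` defaults to shutil.disk_usage and can be swapped out in tests.
    """
    alerts = []
    for path in paths:
        stats = usage(path)  # named tuple with total, used, free (in bytes)
        used_fraction = stats.used / stats.total
        if used_fraction >= max_used_fraction:
            alerts.append(f"{path}: {used_fraction:.0%} used")
    return alerts
```

Running this on a schedule against each host's local and network mount points gives the "red flag" behaviour described above; what counts as a sensible threshold depends on how quickly regression runs fill the disk.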

Scalability and maintenance

When the regression job count grows beyond 1000, maintaining the jobs becomes challenging. A single change that must be made manually in many jobs is time-consuming and error-prone. To overcome this, one should take a modular and scalable approach when designing the test run scripts. Instead of writing the steps in the CI/CD tool, one can keep the test run scripts in SCM. One can also use the Jenkins APIs to update jobs from the backend and save manual effort.
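One way to script such bulk updates is to fetch each job's `config.xml` from Jenkins, edit it programmatically, and post it back. The sketch below shows only the editing step, under the assumption that the job stores its node label in a top-level `assignedNode` element, as freestyle jobs typically do; fetching and posting the XML, authentication, and error handling are left out. The function name is hypothetical.

```python
import re
import xml.etree.ElementTree as ET


def set_assigned_node(config_xml, label):
    """Return the job config XML with its node label set to `label`."""
    # Jenkins may emit an XML 1.1 declaration, which this parser rejects,
    # so the declaration is stripped before parsing (an assumption of this sketch).
    body = re.sub(r"^\s*<\?xml[^>]*\?>", "", config_xml)
    root = ET.fromstring(body)
    node = root.find("assignedNode")
    if node is None:
        node = ET.SubElement(root, "assignedNode")  # job had no label yet
    node.text = label
    return ET.tostring(root, encoding="unicode")
```

Applying the same function across a list of job names turns a change that would take hours of clicking into a single reviewed script.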

Priority management

When regression testing of multiple software products is handled in a single CI/CD setup, priority management becomes important. Pre-merge jobs should be prioritized over post-merge jobs. This can be achieved by running pre-merge jobs on a dedicated host with a separate Jenkins slave and LSF queue. Post-merge Jenkins jobs of different products should be configured with easy-to-update placeholders for Jenkins slave tags and LSF queues, so that priorities can be altered easily based on which product is approaching its release.
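The placeholder idea can be sketched as a small lookup table that jobs consult instead of hard-coding a slave tag or queue. Every label, queue, and product name below is hypothetical, and the two-tier layout is just one possible arrangement.

```python
# All names below are hypothetical placeholders for illustration.
DEDICATED = {"label": "premerge-host", "queue": "premerge"}
HIGH_TIER = {"label": "regress-high", "queue": "high"}

PRODUCT_TARGETS = {
    "product_a": {"label": "regress-high", "queue": "high"},
    "product_b": {"label": "regress-normal", "queue": "normal"},
}


def resolve_target(product, stage, targets=PRODUCT_TARGETS):
    """Return the slave label and LSF queue a job should use."""
    if stage == "pre-merge":
        return dict(DEDICATED)  # pre-merge always runs on the dedicated host
    return dict(targets[product])


def promote(product, targets):
    """Return a copy of `targets` with `product` moved to the high tier."""
    updated = {name: dict(target) for name, target in targets.items()}
    updated[product] = dict(HIGH_TIER)
    return updated
```

Because the mapping lives in one place, raising a product's priority before its release means changing one entry rather than editing every job.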

Integration with third-party tools

When multiple SCMs like GitLab/GitHub and issue tracking tools like JIRA are used, tracking commits, MRs, PRs, and issue updates helps the team stay in sync. Jenkins integration with GitLab/GitHub helps reflect pre-merge run results in the SCM. By integrating an issue tracker like JIRA with Jenkins, one can create and update issues based on run results. With SCM tools and JIRA integrated, issues can be updated automatically on new commits and PR merges.
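Automatic issue updates usually start with finding which issues a commit refers to. The sketch below assumes the team writes keys in the common `PROJECT-123` form in commit messages; the pattern is an approximation and will also match unrelated tokens of the same shape, so a real integration would check candidates against known project keys.

```python
import re

# Assumed key shape: uppercase project key, hyphen, issue number.
ISSUE_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def issue_keys(commit_message):
    """Return issue keys mentioned in a commit message, in order, without repeats."""
    seen = []
    for key in ISSUE_KEY.findall(commit_message):
        if key not in seen:
            seen.append(key)
    return seen
```

The resulting keys can then be passed to whatever issue-tracker integration the team uses to add a comment or change a status.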

Regression test plans must be updated to reflect new changes in the application code, and they must also be improved iteratively to become more effective, thorough, and efficient. A test plan should be viewed as an ever-evolving document. Regression testing is critical for ensuring high quality, especially as the scope of regression grows later in the development process. That is why prioritization and automation of test cases are critical in Agile initiatives.

At MosChip, we offer Quality Engineering Services for both software and embedded devices to assist companies in developing high-quality products and solutions that will help them succeed in the marketplace. Embedded and product testing, DevOps and test automation, Machine Learning Application/Platform testing, and compliance testing are all part of our comprehensive QE services. STAF, our in-house test automation framework, helps businesses test end-to-end products with enhanced testing productivity and a faster time to market. We also make it possible for solutions to meet a variety of industry standards, like FuSa ISO 26262, MISRA C, AUTOSAR, and others.

About MosChip:

MosChip has 20+ years of experience in Semiconductor, Embedded Systems & Software Design, and Product Engineering services with the strength of 1300+ engineers.

Established in 1999, MosChip has development centers in Hyderabad, Bangalore, Pune, and Ahmedabad (India) and a branch office in Santa Clara, USA. Our embedded expertise involves platform enablement (FPGA/ ASIC/ SoC/ processors), firmware and driver development, BSP and board bring-up, OS porting, middleware integration, product re-engineering and sustenance, device and embedded testing, test automation, IoT, AIML solution design and more. Our semiconductor offerings involve silicon design, verification, validation, and turnkey ASIC services. We are also a TSMC DCA (Design Center Alliance) Partner.
