Understanding the Deployment of Deep Learning Algorithms on Embedded Platforms

Embedded platforms have changed the way we interact with technology in our daily lives. Equipped with deep learning algorithms, these platforms enable intelligent applications, autonomous systems, and smart devices. Deploying deep learning on embedded platforms entails tuning and modifying models so that they run well on systems with limited resources, such as CPUs, FPGAs, and microcontrollers. To lower model size and computational requirements with minimal loss of accuracy, the deployment process frequently relies on model compression, quantization, and related techniques.

The global embedded systems market is growing rapidly and is predicted to reach USD 170.04 billion in 2023. According to Precedence Research, it will probably keep growing, with estimates putting it at around USD 258.6 billion by 2032. That corresponds to a projected Compound Annual Growth Rate (CAGR) of about 4.77% for the years 2023 to 2032. The market analysis yields several further insights. North America was the leading region in 2022 with 51% of overall revenue, followed by Asia Pacific with 24%. By hardware platform, the ASIC segment held a market share of 31.5% in 2022, while the microprocessor segment accounted for 22.3% of revenue.

In contrast to conventional computing systems, embedded platforms have constrained memory, processing power, and energy resources. Deploying deep learning algorithms on these platforms therefore requires careful consideration of hardware limitations and of the trade-offs between accuracy and resource utilization.

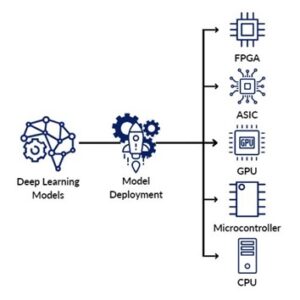

As part of the deployment, the trained deep learning model is converted into a form that runs on the target embedded platform. This entails converting it to a framework-specific format or optimizing it for particular hardware accelerators or libraries.

Furthermore, deploying deep learning algorithms on embedded platforms frequently calls for hardware acceleration, such as GPUs, specialized neural network accelerators, or custom hardware designs like FPGAs or ASICs. These accelerators can greatly increase the inference speed and energy efficiency of deep learning algorithms on embedded platforms. The following sections cover the main aspects of deploying deep learning algorithms on embedded platforms.

Optimizing deep learning models for embedded development

Deep learning algorithms must be carefully optimized and adapted before being deployed on embedded platforms. Techniques such as quantization, pruning, and compression lower the computational load and size of the model while preserving as much accuracy as possible.
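To make quantization more concrete, here is a minimal sketch of per-tensor affine quantization of float weights to 8-bit integers. It is a generic illustration in plain Python, not the exact scheme used by any particular framework, and the function names `quantize_int8` and `dequantize` are our own.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float, int]:
    """Affine-quantize float weights to int8; return (values, scale, zero_point)."""
    # Extend the range to include 0.0 so that zero is represented exactly.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    # Map the float range onto 256 integer levels; fall back to 1.0 for all-zero input.
    scale = (w_max - w_min) / 255.0 or 1.0
    zero_point = round(-128 - w_min / scale)
    # Round each weight to the nearest level and clamp to the int8 range.
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float weights from their int8 representation."""
    return [(v - zero_point) * scale for v in q]


if __name__ == "__main__":
    weights = [-0.8, -0.1, 0.0, 0.3, 1.2]  # illustrative values only
    q, scale, zp = quantize_int8(weights)
    restored = dequantize(q, scale, zp)
    print(q, scale, zp)
    print(max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now takes one byte instead of four, at the cost of a rounding error on the order of one quantization step (`scale`). Whether that error is acceptable has to be checked against the accuracy of the model on real data.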

Hardware considerations for embedded deployment

Deploying deep learning on embedded systems successfully requires an understanding of their specific hardware limitations. Factors like memory capacity, processing speed, and energy restrictions must be analyzed carefully. The key to achieving maximum performance and efficiency is choosing deep learning models and architectures that make efficient use of the target embedded platform’s resources.
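A first-pass check on memory capacity can be done with simple arithmetic. The sketch below estimates the storage needed for a model's weights at a given precision and compares it with a storage budget. The parameter count and the 512 KiB budget are hypothetical values chosen for illustration, and the estimate ignores activations, buffers, and runtime overhead.

```python
def weight_storage_bytes(num_params: int, bits_per_weight: int) -> int:
    """Approximate bytes needed to store the weights alone, rounded up."""
    return (num_params * bits_per_weight + 7) // 8


def fits_budget(num_params: int, bits_per_weight: int, budget_bytes: int) -> bool:
    """True if the weights fit within the given storage budget."""
    return weight_storage_bytes(num_params, bits_per_weight) <= budget_bytes


if __name__ == "__main__":
    num_params = 250_000       # hypothetical model size
    flash_budget = 512 * 1024  # hypothetical 512 KiB budget for weights

    for bits in (32, 8):
        size = weight_storage_bytes(num_params, bits)
        print(f"{bits}-bit weights: {size} bytes, fits: {size <= flash_budget}")
```

With these assumed numbers, the 32-bit version needs 1,000,000 bytes and does not fit, while the 8-bit version needs 250,000 bytes and does. A real assessment also has to account for working memory during inference.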

Converting and adapting models for embedded systems

One crucial step in the deployment process is converting trained deep learning models into formats that work with embedded systems. It’s common practice to use a runtime-specific format like TensorFlow Lite or an open interchange format like ONNX. Moreover, models can be adapted to take advantage of specialized hardware accelerators like GPUs or neural network accelerators, or of custom designs like FPGAs or ASICs, to greatly increase inference speed and energy efficiency on embedded platforms.
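As an illustration of the conversion step, the following sketch converts a TensorFlow SavedModel to the TensorFlow Lite format using the `tf.lite.TFLiteConverter` API. It assumes TensorFlow is installed and that a SavedModel already exists on disk; the function name and the paths in the usage note are placeholders of our own.

```python
from pathlib import Path


def convert_saved_model_to_tflite(saved_model_dir: str, output_path: str) -> int:
    """Convert a TensorFlow SavedModel to a .tflite file; return its size in bytes."""
    # Imported here so the module can be loaded on machines without TensorFlow.
    import tensorflow as tf

    converter = tf.lite.TFLiteConverter.from_saved_model(saved_model_dir)
    # Optional: enable the converter's default optimizations,
    # which can reduce model size through quantization.
    converter.optimizations = [tf.lite.Optimize.DEFAULT]
    tflite_model = converter.convert()  # serialized model as bytes
    # write_bytes returns the number of bytes written.
    return Path(output_path).write_bytes(tflite_model)
```

A call such as `convert_saved_model_to_tflite("path/to/saved_model", "model.tflite")` would write the converted model and return its size, which can then be compared with the memory budget of the target device. Accuracy should be re-validated after conversion, since optimizations can change model outputs.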

Real-time performance and latency constraints

In the field of embedded systems, low latency and real-time performance are essential. Deep learning algorithms must meet the timing specifications of the individual application, so inference has to run quickly and predictably. Thorough optimization and fine-tuning are necessary to strike a balance between the limited resources of embedded platforms and the demands of real-time operation.
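Whether a model meets its timing specification is best settled by measurement on the target device. Below is a minimal sketch of a latency harness in plain Python. The callable `run_inference` stands in for whatever invokes the deployed model, and the choice of the 99th percentile as the figure to compare against the deadline is an assumption; the right statistic depends on the application.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency_ms(run_inference: Callable[[], object],
                       warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls and return median, p99, and max latency in milliseconds."""
    # Warm-up calls let caches and lazy initialization settle before timing.
    for _ in range(warmup):
        run_inference()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p99_index = min(len(samples) - 1, int(0.99 * len(samples)))
    return {
        "median": statistics.median(samples),
        "p99": samples[p99_index],
        "max": samples[-1],
    }


def meets_deadline(stats: Dict[str, float], deadline_ms: float) -> bool:
    """Check the tail latency, not the average, against the deadline."""
    return stats["p99"] <= deadline_ms


if __name__ == "__main__":
    # Stand-in workload; replace with a call into the deployed model.
    stats = measure_latency_ms(lambda: sum(range(100_000)))
    print(stats, meets_deadline(stats, deadline_ms=33.0))
```

Looking at tail latency rather than the mean matters for real-time systems, because a single slow inference can miss a deadline even when the average looks comfortable.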

An iterative refinement process might be required if the deployed model doesn’t meet the required performance or resource constraints. This may entail additional model optimization, hardware fine-tuning, or algorithmic modifications to enhance the effectiveness or efficiency of the deep learning algorithm in use.

To make sure that the deployed deep learning algorithm performs well on the embedded platform, it is crucial to consider variables like real-time requirements, latency constraints, and the particular requirements of the application throughout the deployment process.

Tools and frameworks for implementing deep learning algorithms

Several frameworks and tools have been developed to make deep learning algorithms easier to implement on embedded platforms. Popular options include TensorFlow Lite, PyTorch Mobile, Caffe2, OpenVINO, and the Arm CMSIS-NN library, which offer optimized libraries and runtime environments for efficient execution on embedded devices.

Use cases for deep learning on embedded edge platforms

Let’s look at a few scenarios where deploying deep learning models on embedded edge platforms makes sense.

- Autonomous Vehicles: Computer vision is a major component of autonomous vehicles, and it relies on deep learning models such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs). These systems analyze images from cameras installed on self-driving cars to identify objects, such as cyclists, cars parked at the curb, and pedestrians crossing the street, and then trigger the vehicle to take appropriate action.

- Health Care and Remote Monitoring: The field of deep learning in healthcare is expanding quickly. Wearable sensors and devices, for example, use patient data to provide real-time insights into a range of health metrics, such as blood pressure, heart rate, blood sugar levels, and overall health status. Utilizing deep learning algorithms, these technologies evaluate and decipher the gathered data, yielding important insights for patient condition monitoring and management.

Future trends and advancements

The use of deep learning algorithms on embedded platforms will likely see exciting developments in the future. AI at the edge, where inference runs on or near the device rather than in the cloud, will allow for real-time decision-making and lower latency. Integrating deep learning with Internet of Things (IoT) devices further expands the possibilities of embedded AI. We also expect customized hardware designs that offer improved performance and efficiency for deep learning algorithms on embedded platforms.

Deploying deep learning algorithms on embedded platforms requires a structured process that takes hardware limitations into account, optimizes models, and meets real-time performance needs. By following this process, companies can use AI to drive innovation, streamline operations, and provide outstanding goods and services on systems with limited resources. In today’s AI-driven world, adopting this technology enables businesses to unlock new opportunities and achieve sustainable growth and success.

Throughout that process, real-time performance requirements and latency constraints must be kept in view, because inference is only effective on an embedded platform if it meets them.

MosChip specializes in designing intelligent embedded systems, smart edge devices, and AI/ML applications based on computer vision, cognitive computing, deep learning, and more. We can contribute at any stage of the ML lifecycle, from data augmentation and ML model design to testing and deployment on edge and cloud. Connect with our AI/ML experts for your next-gen embedded solution design.